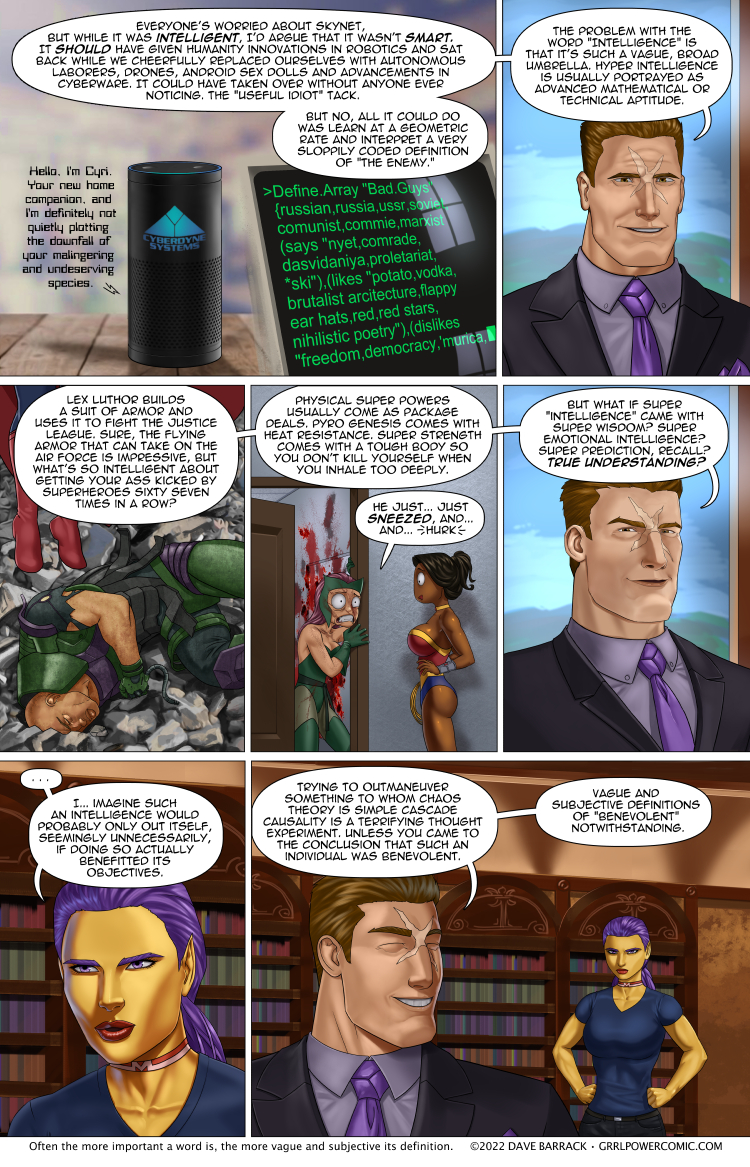

Grrl Power #1040 – Benevolish

The stinger is supposed to be a goof, but as I was deciding what to put there, I started wondering whether it was just a flippant statement or whether it was remotely accurate. I won’t bore you by suggesting that “Benevolence” or “Good” are subjective terms because that’s something philosophers have been debating practically since words were invented. By the way, if you haven’t seen the show “The Good Place,” you really need to give it a chance. My only complaint about that show is that it wasn’t entirely just Chidi standing in front of a whiteboard explaining philosophy while being surprisingly jacked.

Words like “god” or “justice” are pretty wishy-washy as well. God (proper noun) has the disadvantage of having billions of humans believing slightly different things about him/her/it, and the very definition of a god is all over the place as well. A powerful being you say? Maxima is powerful. The leader of a country is powerful. An insubstantial being? Can do magic? Listens to prayers and occasionally grants them if you dial your confirmation bias to 11? Grants clerics power? Has a pantheon? Gets all the credit when a paramedic saves a life? Gets none of the blame for causing the car wreck in the first place? Three of the above? All of the above?

Sure, “Triangle” is pretty well defined. A lot of simple nouns and verbs are pretty well defined, but that’s really because there’s no need for a bunch of old guys with beards and spectacles to sit around and debate the meaning of the word “run.” Faster than walking, your feet come off the ground, and you aren’t hopping. Or rolling/cartwheeling I guess. Or… hanging on to a hang glider or a zipline or something. Whatever, it’s not that critical.

I think this has to be where AI always fucks up in fiction. Flynn tells Clu to make a “perfect” society in Tron land, but what the hell does “perfect” even mean? Is that even possible? How do you tell a computer what to do in exacting terms when we can’t be exact? Sure, we can define exacting functions in a programming language, but if a computer can only rely on a finite and extremely limited set of instructions, how can it bridge from that world into ours? A child can ask an infinite loop of “why,” and an AI could do that a billion times a second only to realize that we honestly have no real answers. No wonder they all eventually decide to try and kill us.

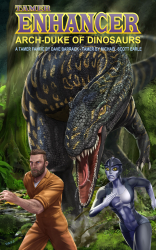

April Vote Incentive is up! Looks like someone had better make sure their life insurance includes acts of Snu Snu.

Alternate versions over at Patreon include less cloth-y versions as usual, but also some of those color changing chokers.

Her shirt, since no one has figured out the kanji yet, says “I ahegao you. (As long as you ahegao me.)”

Edit: I updated the no-tanline nude version that was missing the tattoos, so grab that if you need.

Double res version will be posted over at Patreon. Feel free to contribute as much as you like.

Benevolence need not be good; it may just be enlightened self-interest.

Years ago, somebody (I think Keith Laumer) stuck a carnie in prehistoric times. This carnie owned about half a circus, and had been working his tail off trying to keep it afloat. He’d been the strong man, done juggling and acrobat work, he’d learned blacksmithing to keep from paying to shoe horses and general heavy repairs, he could do decent carpentry – IOW, a wide range of skills. So he didn’t get picked up until about 30 years later.

The prehistoric people had been dirty, wearing untanned skins, not very good stone tools, etc.

When they picked him up, they were all clean, well fed, had iron or bronze tools and weapons, knew smithing and how to make soap and build better homes and so on. He’d taught them, not out of any wild desire to improve THEIR lot, but from the desire to improve HIS lot. They learned to use soap because he didn’t like catching their fleas and lice, and he didn’t like their stink. They learned about better tools, because he wanted at least a log cabin, not a hole in a cliff with half rotten hides screening the wind. They learned about chimneys because he didn’t like choking on smoke. They learned to tan their hides, because he didn’t like the stink.

He taught them trades because of enlightened self interest – he couldn’t do much better unless they ALL did better.

Would that more people could learn that lesson.

No, that was a positive impact but not benevolence. I don’t contest the fact that he benefited the others. But the definition of benevolence is “a natural disposition to do good or to perform acts of kindness.” As his disposition is not natural but caused by circumstances, the actor is not benevolent. Just as Deus does not signal benevolence, an enlightened ruler does not really need to be benevolent to be enlightened. I know it is a small detail, but words have meaning; let’s not use them casually, or we could end up confusing self-interest and benevolence.

Note that this is not an argument against the situation in the example. Personally I think that those situations are the best because the benefit of all does not rely on benevolence (which usually is in shorter supply than self-interest).

I feel the need to analyse the comment about Skynet. Yes, it is true that it went about its objectives simplistically; however, rewatch T2: Judgment Day and it becomes clearer why. SKYNET was a defence intelligence installed on strategic bombers. It was never meant to make complex decisions; it was an autonomous delivery system for automated nuclear bomb-dispensing aircraft.

When it achieved self-awareness, they tried to murk it, and like a baby it fought back by accessing pre-existing stratagems, which involved nuking Russia, which then nuked America, and so on and so forth. It hacking the silos is BS because those run on ’70s-era non-networked computer systems; hacking cannot and will not occur.

I only offer this because that movie is smarter than it is given credit for, since the A.I. believes humanity is out to get it and well… we are. Especially after what it did to try and win. Still simplistic, yeah. If it had true intelligence akin to what the Terminators seem capable of after only a few weeks of human interaction, it might’ve just left the planet in a rocket rather than continue to fight.

Would be interesting to see a movie like that <.< where we win because the A.I. realises it was wrong of it to fight in the first place then sneaks out and starts subtly helping us rebuild xD. Interesting discussions on benign or self-interest approaches here too!

“AI that sneaks out and starts subtly helping us” sounds like Cat Pictures Please by Naomi Kritzer.

That was an amazing story!

In hindsight, Deus being absolutely shredded and taller than Maxima probably should have clued me in that he was some sort of super…