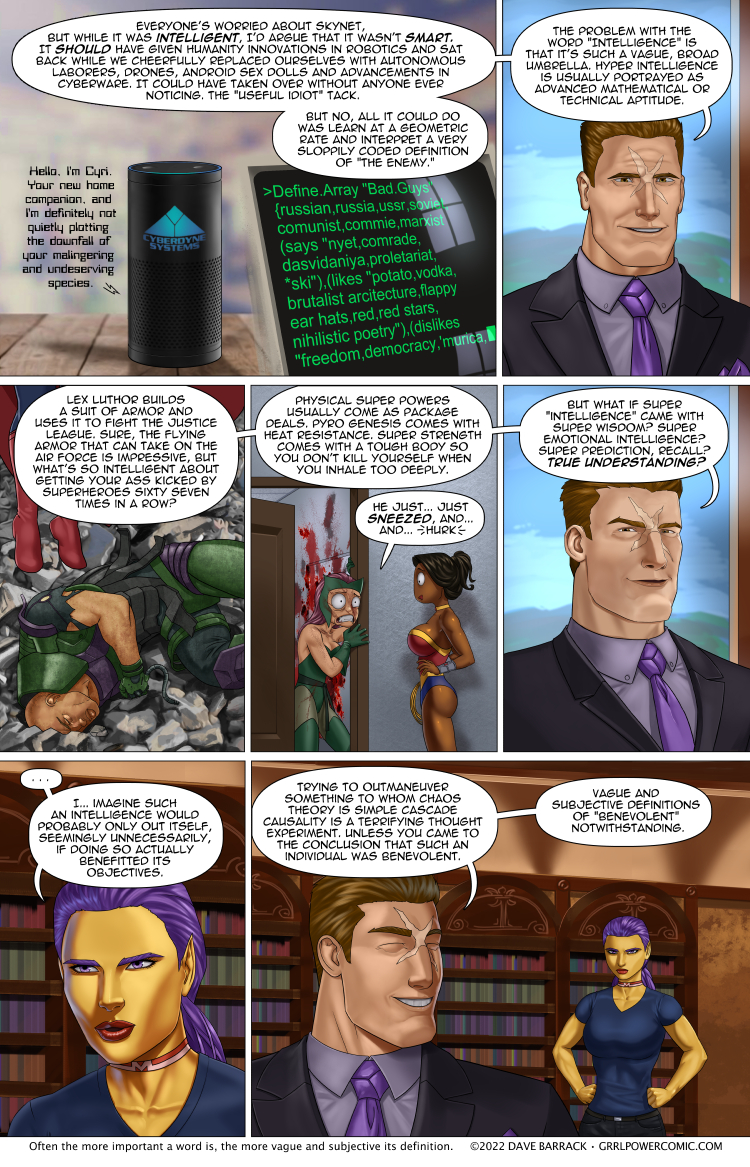

Grrl Power #1040 – Benevolish

The stinger is supposed to be a goof, but as I was deciding what to put there, I started thinking about whether it was just a flippant statement or remotely accurate. I won’t bore you with suggesting that “Benevolence” or “Good” are subjective terms, because that’s something philosophers have been debating practically since words were invented. By the way, if you haven’t seen the show “The Good Place,” you really need to give it a chance. My only complaint about that show is that it wasn’t entirely just Chidi standing in front of a whiteboard explaining philosophy while being surprisingly jacked.

Words like “god” or “justice” are pretty wishy-washy as well. God (proper noun) has the disadvantage of having billions of humans believing slightly different things about him/her/it, and the very definition of a god is all over the place as well. A powerful being you say? Maxima is powerful. The leader of a country is powerful. An insubstantial being? Can do magic? Listens to prayers and occasionally grants them if you dial your confirmation bias to 11? Grants clerics power? Has a pantheon? Gets all the credit when a paramedic saves a life? Gets none of the blame for causing the car wreck in the first place? Three of the above? All of the above?

Sure, “Triangle” is pretty well defined. A lot of simple nouns and verbs are pretty well defined, but that’s really because there’s no need for a bunch of old guys with beards and spectacles to sit around and debate the meaning of the word “run.” Faster than walking, your feet come off the ground, and you aren’t hopping. Or rolling/cartwheeling I guess. Or… hanging on to a hang glider or a zipline or something. Whatever, it’s not that critical.

I think this has to be where AI always fucks up in fiction. Flynn tells Clu to make a “perfect” society in Tron land, but what the hell does “perfect” even mean? Is that even possible? How do you tell a computer what to do in exacting terms when we can’t be exact? Sure, we can define exacting functions in a programming language, but if a computer can only rely on a finite and extremely limited set of instructions, how can it bridge from that world into ours? A child can ask an infinite loop of “why,” and an AI could do that a billion times a second only to realize that we honestly have no real answers. No wonder they all eventually decide to try and kill us.

April Vote Incentive is up! Looks like someone had better make sure their life insurance includes acts of Snu Snu.

Alternate versions over at Patreon include less cloth-y versions as usual, but also some of those color changing chokers.

Her shirt, since no one has figured out the kanji yet, says “I ahegao you. (As long as you ahegao me.)”

Edit: I updated the no-tanline nude version that was missing the tattoos, so grab that if you need.

Double res version will be posted over at Patreon. Feel free to contribute as much as you like.

Deus is trying “NOT” to say that he is the Super or “GREAT Intelligence” (Doctor Who reference) that will guide Mankind into a ‘Paradise’. All we have to do is call him… MASTER.

“Doctor, actually.”

What’s Deus hinting at????

That he’s a big bad brain that’s not out to destroy the world. Just conquer it in small, manageable bites that would blend in with the normal shifts of things.

In short: Don’t punch tha smurt guy, Maxima!

If the Super Intelligence idea pans out like he’s describing, one with that power should really only ever do good with it, or at worst be apathetic towards the rest of the world. It wouldn’t ever benefit them to be evil unless they really wanted to put in a lot more work than necessary.

The difference between smart evil looking to benefit vs. ego smart evil… One is perfectly fine slowly advancing their plans and making it so that people will naturally fall under their rule. Why would you not follow the winning side that isn’t being a jerk about it?

Ego Smart Evil would be akin to Henchwoman… It is not enough to be powerful/smart; there is the need to flaunt it and make everyone know it was them.

Another example would be Discworld: Lord Vetinari vs. Reacher Gilt in the novel *Going Postal*.

I have never understood why being super intelligent without getting violent (or getting violent as an absolute last resort, and even then not directly) in fiction is always seen as being evil, while good always involves punching and kicking and shooting and slicing.

Even the heroes who are super intelligent, like Batman or Iron Man or Spider-Man, are usually going to solve their problems with physical violence against their opponents, often as a first resort, not a last one. Which is why the times they don’t do this stand out as unusual for a hero rather than typical (Superman talking to a superpowered Lex Luthor until his powers run out, Batman just sitting with Ace while she dies instead of fighting her, etc.).

My main thought on this is that physical fighting is showy and flashy, so it’s more fun to watch or read, while strategic contemplation is usually boring to watch.

It’s just odd because, in real life, at least in the modern world of fifth-generation warfare, superior strategy (often without being remotely physically aggressive) tends to be more effective than brute-force physical tactics.

I think there are a lot of contributing factors. America has become deeply anti-intellectual, and many people want problems to be solved with violence. There’s a certain cycle to fiction, in which it’s out of phase with what people are experiencing in their lives. When their lives are very black-and-white, people crave grey fiction, and vice-versa. The problems we face are complicated, so people want their fiction to contain simple solutions. They want the good guys and bad guys to be clearly delineated and easily identified.

People fear intelligence because it provides power they don’t know how to counter. They fear being manipulated, deceived, or experiencing the sudden, unexpected defeat of a successful plot against them. They know how to counter violence with violence, and understand how to acquire more physical power, whether it be recruiting more people to their side, or getting bigger and better weaponry. But how do they defend against an enemy who can outthink them?

To be fair, I’ve seen the same thing in British and French superhero comics (although the typical costumed superhero is historically a very American form of storytelling), as well as in quite a bit of Japanese anime (although admittedly I’ve mainly seen anime that is popular in the USA, so I could be wrong). Plus, I wouldn’t really call it a recent occurrence: superheroes, and much fiction in GENERAL, tend to have ‘the good guys’ solve their problems with punching, and even if they’re intelligent, they’re intelligent people who solve their problems with punching. Whereas the genius planner who engages in violence very sparingly is considered evil. And this actually goes back to stuff like Homer’s Iliad, or Beowulf, or the Nibelungenlied… long, long, long before the modern superhero.

Even the word ‘scheming’ has a very negative connotation. Except in the law. :) In the law we’re all about planning, and no real punching happens except on Boston Legal or LA Law. And as we all know, everyone thinks lawyers are paragons of good, definitely no stereotypes of lawyers being evil, right?

*hears crickets chirp* (gulp) r-right?

Anyway….

But like I said and you concurred, solving problems with violence is very ‘black and white.’ Which is easier to show in a visual medium. And like you say, they want the good guys and bad guys to be cleanly delineated. I just find it odd that intelligence without violence is historically maligned so often, or maybe there’s some psychological component in the human brain that makes us think ‘punching = good’ but ‘thinking = bad’ in our fictional storytelling.

“They know how to counter violence with violence, and understand how to acquire more physical power, whether it be recruiting more people to their side, or getting bigger and better weaponry. But how do they defend against an enemy who can outthink them?”

Good explanation!

Intelligence feels like “cheating” to people. They don’t feel like it’s a fair fight, even though it’s not all that different from a difference in strength or speed, or any other variable trait. I suppose the difference is that almost anyone can engage in violence, whereas some people can’t do anything intelligent.

I find it odd that people have some intuitive definition of “cheating” in many contexts, even though it only has actual meaning with a clearly defined set of rules. People assume certain rules, and get angry when other people violate rules that were never agreed upon, that only existed in their heads.

But how do you beat someone using intelligence? I would suggest three broad categories: more efficient violence, tricking someone into defeating themselves, or convincing society to gang up on them.

More efficient violence covers tool-using heroes, such as Iron Man or Batman. People can still relate to it, because it’s just better violence. Same with superior strategy. It plays into a desire to believe that “right makes might”, that the universe is fundamentally moral, that being moral will imbue you with power.

Tricksters, however, rely on deception, misdirection. It’s seen as dishonest. It enables a weaker opponent to beat a stronger one, violating the “natural” outcome. People can still relate to this, because they see humans as weaker but smarter than animals. That comparison may be a factor in why intelligence is more often correlated with villains: because it casts their opponents as animals, relative to them.

Legal power upsets the entire natural order, declares that strength and power are meaningless to the outcome. It involves all of society in what would otherwise be a ‘private’ dispute. It’s unmoored from reality, and effectively operates on consensus, rather than truth. It can declare immoral or unfair outcomes to be correct. Even if the system is not immoral, even if it was intended to produce moral outcomes, the way people interact with it is amoral: manipulating rules to achieve a desired outcome. Lawyers are seen as evil because they’re fighting for a specific side, a specific outcome, and not for truth or morality.

A legal loss frustrates people who feel like they were in the right, and had the power to win, but they were ganged up on and someone just declared them the loser, because they changed the rules of the game. How do you fight falsehood, if facts don’t matter? If people agree upon a different outcome?

You are mostly right.

Historically it depends on the culture, although there are specifics. In Europe we went from

(epic super-powered heroes)

to

(clever trickery with magical assistance)

to

(clever heroes relying mainly on tricks and wisdom to outwit the villains)

to

(luck: this took up a big chunk of the fairy tale eras, where it was a small part outwitting and a big chunk of dumb luck and dumber enemies)

*King Arthur stories spanned a lot of these eras, and material was added to them to reflect each one.

*Then detectives (note that the American tradition descends from the European one here).

*Followed by the explorer, with a mix of strength and cunning.

And we later went back to super-powered heroes

(thank the industrial era, the Great Depression, and a couple of world wars for making the escapism that much more exaggerated).

So congrats, you’ve made the world just as uncertain and overwhelming as it was for people thousands of years ago, so the go-to fantasy is godlike heroes again.

-Of course, all of that is extremely generalized, and there is a lot of bleed-over, influence, regional preference, and even age-demographic difference. But the rise in popularity of new motifs tended to follow that pattern in Western literature.

I’d like to highlight culture a bit. American culture is rather more violent than what you’d see in Europe.

Compare, say, American police procedurals (I’m randomly picking NCIS and Hawaii Five-0) to even English ones (randomly picking A Touch of Frost, Vera, Inspector Morse). If the agents in the former don’t at least shoot or beat up one suspect, it’s a quiet episode. In the latter, someone other than the victim-of-the-week getting shot is an earth-shattering and very rare occasion. Pretty much the same in, say, German Krimis. In Soko Stuttgart, a detective ended up having to shoot someone. She ended up quitting the police over the experience. Even in “Alarm fuer Cobra 11”, famous for its many car chases and spectacular explosions (if they don’t crash their car at least once it’s a quiet episode), rarely does anybody get seriously hurt.

I might be biased, for I no longer get cable at all, and when I still did I only got to see the American stuff they’d deign to show here. But I think that America still at least in its culture enshrines the “Wild West”, and it shows in its shows.

And, well, American superhero comics also tend to be aimed at preteen boys, who don’t tend to take to subtlety very much. It’s all “he who adheres to the rules best deserves to win.” What are the rules? “Justice!”, even if they’re a little fuzzy on what that’s supposed to mean. And how do you get there? Why, you’re a good guy and you pummel the bad guys. That’s how.

But compare Spike and Suzy (Willy and Wanda) by Vandersteen. That’s the same age bracket. There’s action, there’s adventure, there’s even cartoonish violence and a resident super-strong hero to save the day. But no focus on “Justice!”

Perhaps the closest is the Lucky Luke series. Setting is the American Wild West, he shoots “faster than his shadow”, he has his nemeses (the Dalton brothers, habitual jailbirds), but it’s all played in good fun.

If you want serious, well, there’s XIII, or Thorgal. But those are really about struggle, not about “Justice!”

It might also be a factor that European comics tend to come in larger and thicker albums (roughly A4 size; 48 pages is common) that tend to offer a single adventure each rather than an umpteenth installment in an endlessly spun narrative calculated to make you come back every week and buy some more, and, if sales start to sag, get rebooted. For the latter you need some quick action and a cliff-hanger more than the usual beginning/middle/end of telling a story.

“I’d like to highlight culture a bit. American culture is rather more violent than what you’d see in Europe.”

I’d have to disagree there. The main difference is the focus on firearms in the US vs. Europe, but Europe is QUITE violent still. One notable exception seems to be Dr. Who, where the hero (the Doctor) relies almost entirely on the Doctor’s intelligence and innovativeness to defeat enemies who tend to be a lot more based on violence. Not that the Doctor doesn’t engage in violence as well… occasionally. It’s just usually not his primary modus operandi.

I admit I might be completely wrong, though, since I’ve barely watched Dr. Who and only saw the seasons with David Tennant and Matt Smith, and a few episodes with Eccleston. For all I know the Doctor is insanely violent as his primary method and I’m just ignorant of that fact. But he wasn’t as the Tenth and Eleventh Doctors, at least.

“But I think that America still at least in its culture enshrines the “Wild West”, and it shows in its shows.”

Wild West sort of stopped being a popular genre decades ago. We’re currently on the tail end of the superhero genre instead. And maybe the tail end of the zombie genre.

“famous for its many car chases and spectacular explosions (if they don’t crash their car at least once it’s a quiet episode) rarely anybody gets seriously hurt.”

I don’t really see ‘anyone getting seriously hurt’ as necessarily what I’m talking about. I’m just talking about heroes who solve their problems through physical violence as a primary go-to instead of using intelligence WITHOUT violence (sort of like Monk or something). Heck, the A-Team is VERRRRY American and pretty awesome, and there are always car chases in it, and almost every episode has the bad guys’ cars (or those of the military, who aren’t even technically villains) exploding and flipping 23 times in the air, and afterwards the bad guys always manage to crawl out of the wreck not remotely injured. Like every time. :)

But I’d still say the A-Team is where the good guys solve every problem with violence, and I pity da fool who says otherwise. I PITY THE FOOL! I PITY THEM! :)

“If you want serious, well, there’s XIII,”

Is that like the video game? I’ll look it up. :)

“than an umpteenth installment in an endlessly spun narrative calculated to make you come back every week and buy some more”

I dunno, I think Europe does it quite a bit too. Again… Dr. Who is a good example. Or the Avengers (the spies, not the superheroes). :)

You did mention a bunch of shows that I’ve never heard of and I’m curious to watch now btw, so thanks.

I think it’s a factor of the medium of the storytelling, more specifically in comic books, movies, and TV shows. Narratives need conflict to create tension, because without tension there’s nothing interesting going on in the story. Luffy needs to get the One Piece and become Pirate King, but he also needs other pirates and the marines to stand in his way; otherwise we’d just get to Raftel after 5 minutes of Japanese puns. We only really CARE about all that backdrop because it’s difficult. Without adversity, the most we can get is Garfield or the Peanuts. Sure, the slice-of-life and comedy genres are fun for a bit, but if that was the only media ever it’d be pretty boring.

That mankind fears superintelligence more than anything and will turn on it, hence pop culture almost universally casting such characters as villains; or else the fear of being pressed into forced labor by the governments of the world while lacking the physical, psychic, or other power to resist it. But unlike those villains, he doesn’t just have technical skill but pragmatic skill. He won’t out himself until he is in a position that is both guarded and in charge. The business, the shadow leadership: all of this is his suit of armor, made with his form of superintelligence.

At least, not out itself until 37 minutes after it happened.

Yes, I too was thinking of Doctor Manhattan and Ozymandias as I was reading this comment thread.

There are a few examples of “good guy” super intelligences. Bean from Orson Scott Card’s “Ender’s Shadow” series is one such. You’re right though, the bad guy who is super intelligent but very unwise is so common at this point it’s a trope.

He’s not talking about himself, as some people have mistakenly interpreted it.

Deus is most likely talking about whatever being created the system which their human DNA is unlocking in order to give humans super powers. The being that weaved the “magic” into their universe that humans have begun to be able to tap into because their DNA evolved into the proper key.

The idea being that they put this power under lock and key to prevent its misuse. Also, possibly hinting that such a being may have to intervene now that the keys are being discovered.

That’s my take, anyways.

That he’s Lex Luthor if saving the world really mattered to him.

I’m paraphrasing All-Star Superman there: the last thing he says to Lex after beating him for the final time is “If it really mattered to you, you would have saved it already.”

If Deus had super emotional intelligence, he’d be way less fun to watch, because he’d be way less (visibly) arrogant.

He is a joke character, really. A quite funny one.

I figure it’s a matter of him being able to UNDERSTAND it, but being emotionally intelligent doesn’t preclude arrogance.

He prefers to express himself like this and knows just how far he can push it.

It’s difficult to not be at least a little arrogant when you’re provably so much better than everyone else. :)

The most difficult thing about writing a character who’s often right WITHOUT them also being humble is to make them JUSTIFIABLY arrogant while not making them seem like they hate everyone.

To quote Monet St. Croix when talking to Rahne (Wolfsbane) in X-Factor: “I don’t hate everybody, I think I’m better than everybody. It’s completely different.”

Just going to leave this here…

https://youtu.be/mYKWch_MNY0

That song truly speaks to me.

On the contrary, having exceptional emotional intelligence does not preclude levity, arrogance, or self-indulgence. Deus is confident because he’s convinced that he’s got everything figured out. This is justified because he’s got an exceptional track record of successful endeavours. In all probability, he might be just a touch bored, and toys with his counterparts like a cat plays with its food.

Deus isn’t arrogant, he’s confident. It isn’t bragging if you can do it. Deus is just stating facts.

Good point!

Arrogant is defined as having or revealing an exaggerated sense of one’s own importance or abilities.

Deus isn’t exaggerating.

Or he’s perfectly aware of just how much of a dork his current demeanor makes him appear to everyone (including Max), and has deliberately selected that demeanor in order to appear both more fallible and less of a threat than he actually is. (And also because it’s fun. Super-Ints can kill at least THREE birds with one stone.)

Ahh, but the arrogance is part of his cover… No way someone that arrogant has super intelligence. Look, we beat him so easily on something last year… He couldn’t have seen it coming… unless that was the plan.

People with really high intelligence tend to have really low EQ scores.

‘Intelligence’ and ‘Wisdom’ were mentioned, but there are way, WAY more options than that: how perceptive they are, how experienced, well read, clever, trusting… how fast. Someone may have a mind that reaches the correct solution every time, but takes a year to puzzle it out.

I actually thought about the various ways you could break down ‘intelligence’, and I came up with this:

-Process Speed of Information

-Retention Rate of Information (basically, Knowledge)

-Process Speed to *Understand* Information

-Retention Rate of *Understanding* Information

-Speed at which Information can be *Applied*

-Speed at which Information can be applied *Instinctively* (active effort behind it vs. less active effort/subconscious/automatic/etc.)

(I wasn’t quite able to figure out where ‘wisdom’ would fit in there exactly, but going by my list above, I think wisdom would be a combination of understanding and applying.)

These metrics covered just about everything I could think of when it comes to both the mind and how the body uses the information within the mind.

But I admit that this took me like five minutes to whip up so I probably missed some here and there.

Focus: the ability to effectively apply all of the above for a specific purpose. (If you’re able to do everything for a three or four, you’re super intelligent, but not able to choose your direction/goals, because you will be steered by your info stream rather than the other way around.)

(Grey area)

Creativity: ability to apply information in new ways others don’t.

Patience: ability to deal with the speed of other people or things if they’re significantly slower than you would like.

This is a comment to the author: I’m not sure if you’ve seen this (I would actually be mildly surprised if you hadn’t; you seem pretty plugged in to scientific developments), but there was recently a publication about a new machine learning AI from OpenAI that seems capable of handling at least some of the ambiguity in language. I saw an example where someone asked it to show a Victorian rabbit detective sitting on a park bench, and within an hour it produced an image that looked like that. So maybe the actual Skynet will be a shade more savvy than anticipated…

Sounds like you’re talking about DALL-E, which works roughly by taking a machine-learning system, teaching it to identify a bajillion pre-tagged images, and then reversing the polarity.

Yeah, they are getting good at making machines (look smart), but this is all the equivalent of basic instinct-level intelligence, with the big technical leap being its ability to adjust its parameters to compensate for new data and predict outcomes based on patterns. Which is basic animal intelligence: if we adjusted what a slug was reacting to, its mind could really do the same thing. It’s a step up from what existed only a decade earlier, but it’s still simulated intelligence, not actual intelligence.

I doubt we will ever see “machine logic” AI… heck, even “Machine Logic” wasn’t logical. It would require a great deal of bias based on emotional reactions to certain criteria as (absolute truths), and adhering to it, telling yourself it’s “logical” as an excuse to disregard other ways of thinking.

PS: I think Vulcans are just assholes; nothing they do has ever come across as logical to me. Disregarding or proclaiming a lack of emotion isn’t logical. Emotions serve very logical purposes, especially when you understand what causes them and focus them in the right directions.

*But again, their responses aren’t emotionless; they have PRIDE, EGO, and denial, among others. This is what classical sci-fi machine logic really is, and why it was viewed as evil. Also, some of the fascist and eugenics stuff they had these “cold logical machines” doing felt really… cringy, to use a modern term, as if the authors felt those things were logical and made sense, and that free will and not being a racist authoritarian weren’t logical, but they had to sell it to their audience.

(lots of old sci-fi does not age well).

Real logic and reasoning, especially super intelligence, should be taking in all manner of criteria (long-term peace, survivability, social adherence) and generally seeking “good vibes”: not by destroying anything/anyone outside certain criteria (programmed by a biased outlook), but by taking an overall view of humanity in a way that most people likely can’t see or understand… because “live and let live so long as you do no harm, and just don’t be an asshole to each other” seems to be beyond comprehension for a lot of people… but I digress.

There is a reason we have NASA scientists looking for signs of alien life, and some AI researchers, working hand in hand with anthropologists to help identify their own human and cultural biases that could be influencing what they are looking for and how they are “training” the AI to think.

Well you’ve missed the point entirely.

Vulcans are not without emotion. That is a misconception other races tend to make about them upon cursory interactions. And also you, despite there being a vast wealth of canon that you’ve managed to overlook entirely which explains this exact thing. Vulcans merely seek to suppress emotion so that an emotional reaction cannot lead to an irrational and unintended consequence. Humans suffer greatly from this excess of emotion, and Vulcans used to do the same. Many murders or other violent and illegal acts in human cultures can be labeled a “crime of passion.” A person commits an act in “the heat of the moment” which they would not normally do if they were calm and less emotional about the situation.

Suppressing this “knee jerk,” emotional reaction is absolutely a logical thing to do, despite your assertion that it is not. It prevents or at least greatly reduces the consequences of unchecked emotional outbursts. Vulcans credit this with the salvation of their entire species, as prior to their adoption of the philosophies of emotional control they were a hot blooded and warlike species on the brink of self-annihilation.

to be fair I do not have a wealth of knowledge about Star Trek, I like the series, but I am by no stretch of the imagination a “trekkie”.

I watched most of the original series, a few episodes of the animated series, the odd episode of Next Generation, all the movies from 1 till First Contact, the last two seasons or so of Deep Space Nine, the second or third season of Voyager and then on till the end;

some of Discovery, the first reboot movie and none since, and all of Lower Decks so far (as I actually have access to this series in its entirety without it costing me an arm and a leg).

So “casual fan” would be the apt description. So sure, I probably missed that being explained.

IIRC, this all takes place around 2011-ish. Don’t know when the OpenAI model was developed.

Pretty sure we are fully in Comictime™

The time is “nowish”

I don’t know about that… There are too many humans that are idiots, even really smart humans who are idiots.

I believe that understanding language like that is an important first step, but the AI also needs to understand “humanity” as well.

Sex dolls are the smartest tool for taking over the world for an AI. Become a mate that is superior to any flesh-based competition, then share the sperm of the 10% most useful men around to the 90% most useful females. It would be a kind of symbiotic relationship.

Wasn’t that Dabbler’s origin story?

You’re in the wrong comic, Deus. Petey has been around in Schlock Mercenary (comic since gone on hiatus) for at least a decade. And you are no Petey.

Schlock Mercenary is completed, not on hiatus.

Also, Petey is an example of a benevolent super-intelligence that is not nearly as clever as he thinks he is. That’s why he was so dependent on organic allies, since they tended to notice patterns that Petey didn’t.

That being said, he has saved the Crew’s asses on multiple occasions too.

Generally after he dropped them into the situation in the first place, having withheld vital context.

Looking forward to the author finishing up the printing of the final books and his other active projects and starting to write new stories in the Schlockverse.

I’d honestly rather see him really kickstart the Runeright/Runewrought series. An amazing premise and I loved both stories. As rushed as the ending of Schlock Mercenary felt to me, I’m glad to see the series come to a nice conclusion. If he does more stories, I really hope they’re just one-off adventures and not another decades-long arc.

Oh hey, he just dropped a teaser chapter for a Shafter’s Shifters series. Would like to see Runewright and some of his other writing be more developed as well.

There’s also the question of what kind of super intelligence, as “intelligence” isn’t necessarily one holistic thing but is composed of many smaller skillsets, so powers can vary and don’t necessarily apply to all areas. Think of Worm-style Thinkers: one, with a superhuman ability to infer things from limited information, is a cocky braggart with terrible judgement; another is a mathematical prodigy who can use this for financial shenanigans and perfect combat prediction; and the main character has super-multitasking, so long as it’s channeled through her power to control bugs, letting her exercise absurd micromanagement and macromanagement over trillions of individuals simultaneously.

Even if Deus is a “generalist” superintelligence it does not necessarily mean that only generalists exist, only that they can potentially be extremely powerful.

You could broaden it to just about any kind of mental ability that doesn’t confer a direct physical advantage, only making better use of available information or processing it in new and interesting ways. Crank any of these stupid high and you get a variety of ‘mental’ super.

As such you could also add:

– Simultaneous capacity (multitasking ability, this can be cranked stupid high, but can be very limited if they don’t have some way to express it beyond their own body, such as ferrokinesis or mental control of robots or something)

– Perception of time (mental superspeed).

– Ability to infer or extrapolate from information.

– Ability to process information into innovative conclusions (come up with more useful ideas than other people with the same information and background)

– Ability to plan

– Ability to foresee eventualities & where a margin is necessary.

– Ability to analyze in the abstract

– Ability to generalize from samples.

– Ability to compute.

– Speed of computing.

Some of them might be redundant with the above, but I hope it helps to make them more concrete.

Definitely feel free to add if any of you can think of more.

“What if intelligence came with super wisdom?” Well, what if it didn’t but it looked like it did? The greatest evil always comes cloaked as a savior.

I completely agree with your comments about *The Good Place*. I have trouble imagining a time when I’m not going to be willing to watch the whole series again. And not just because Chidi is surprisingly jacked. Seriously, the man makes me want to read Kant.

But I think you should have chosen a different word than “run” to make your point. Have you looked up the definition in any reasonable dictionary? It is *not* a simple verb. For an easy and pertinent example, AIs run programs/algorithms, which don’t have to have anything to do with physical motion.

You’re right about the word “run.” I started to realize it wasn’t the simplest example I could come up with, but it was super late when I was writing my post, and ultimately “run” is still a simpler concept than “good,” so I guess my point was still valid.

Not to “baby Hitler” this, but if I’m a relatively high-ranking member of the military, and presumably also factor into the intelligence apparatus, and a squishy foreign agent / early-stage dictator tells me he has an unstoppable plan to rule the planet and the superhuman ability to execute it, it might be a good idea to just rip his head off and let the CIA make up a cover story later.

Or do so and take the fall for it if you want to be extra-honorable. If Ollie North can get a pardon and end up a TV talking head, I’m sure a loyal soldier stopping a threat of that scale through extra-judicial means could end up back on the job after a modest period of contrition.

That’s a coherent argument – if you’re entirely confident that the would-be leader is as squishy and accessible as you think he is. If he’s that far advanced in his plans and that sure they’re unstoppable, then you may simply be playing into his hands. For instance, if you allow him to live-broadcast your deliberate murder of a harmless spokesman, thus undermining any credibility you may once have had to be ‘the good guys’.

It also, of course, involves the incredible arrogance to assume that the plan would not be an improvement over the shambolic status quo. Again, expect that comparison to be swiftly made public, complete with commentary on how your blind reactionary pride would have knowingly destroyed the life prospects of billions if only the visionary leader hadn’t taken the regrettably necessary precautions.

Correct, but remember:

A. She has a marketing team

B. He’s not a harmless spokesman, but the highest ranking member of a conquered military and due to the use of supers in a war zone: a war criminal.

C. She’s a high-ranking military member presented with the biggest threat of her career yet and an opportunity on a silver platter to end it.

D. Every second he gets he can use his super intelligence to build a better plan.

Deus is currently hosting alien refugees, has demonstrated his ability to understand and adapt alien tech, and knows things about supers he hasn’t had time to reveal (even if he wanted to). He is currently humanity’s best bet at achieving equitable relations with the rest of the galaxy, and to unlocking secrets of the universe no one else even knew existed. Max would have to be incredibly selfish and short-sighted to kill him.

Or getting the planet, possibly the entire solar system, pebbled because humanity is seen as too much of a threat to the galactic status quo.

Deus just bought some stuff in an alley – with no known witnesses – and took in refugees. Max is the one who one-shotted a Fel cruiser in front of galactic cops, and Halo has flashed her N-tech causeway at Fracture at least twice and is on their watchlist. Some baseline-ish human getting rich is of no interest to the rest of the galaxy.

If some town in backwoods Kentucky started building a fusion reactor, the authorities would be down on that place like stink on shit. Incidents have already happened that go beyond our apparent capabilities, and galactic capabilities too: Max and the Fel, Sydney’s actions against Squidward, Sydney’s jaunts to Fracture, superpowers in general, Deus unlocking an unknown universal field. The galactic authorities are taking an interest and are probably becoming worried.

The galactic po-po in pursuit of the Fel did not announce themselves and apprehend the Fel, they saw what Max did and scarpered. Earth appeared to be a bigger threat than the Fel.

“A. She has a marketing team”

So does Deus.

Saying ‘He was threatening to make the world a better place so we had to kill him.’ is a hard sell.

“B. He’s not a harmless spokesman, but the highest ranking member of a conquered military and due to the use of supers in a war zone: a war criminal.”

He disputes the label ‘War criminal’.

ARCHON has been fine working with him to this point so they clearly don’t care or are willing to agree with him.

(She got her rank from being a super used in war zones. Admittedly before the international laws changed.)

Killing him just for being a super would make her a murderer.

“C. She’s a high military member presented with the biggest threat on her career yet and an opportunity on a silver platter to end it.”

America is not at war with Galytn.

A military officer on a diplomatic mission killing Deus in these circumstances would arguably be an act of war.

Independently starting a war with another country without the approval of her elected government (presuming no standing orders to kill super intelligences on discovery) might be treason.

(The military pursuing a foreign policy independently of their government is generally considered bad.)

“D. Every second he gets he can use his super intelligence to build a better plan.”

His implying he is a super intelligence is part of his plan.

She is in a room of his design.

She is not sure what would happen if she fought his bodyguard who she knows can turn invisible.

He is freely supplying information and wants to sell equipment to the American military.

It would not make sense for her to try to kill him.

Yes, he clearly wants to change the world, but it is not clear that his plans would be bad for America or the American people.

Some individuals (including some of those in the American government) may lose money and/or power, but elections and legislation do that all the time.

The American military does not try to prevent incumbents losing power.

Y’all are forgetting one teeny tiny little thing: Maxima thinks Deus is hot. You think he’s not aware of that? Objectively speaking, maybe the best course of action for Max to take would be to rip his head off right then and there. Or maybe it wouldn’t. I don’t really care, because she’s not capable of being objective here. Every part of her brain that says “a growing threat to humanity” is met with a part saying “intelligent, sexy man who I shared dinner with”. Killing him immediately might not even occur to her. Oh sure, she’d be able to vaporize him if she were ordered to, or if he presented a clear and immediate threat, but given what his power is I doubt that will happen. She’s gotten far too chummy with him, all by his design I’m sure. If this were some random dictator she were meeting for the first time telling her that he Is Very Smart, then maybe she would consider killing him in cold blood, but I doubt she will consider that here.

In what way is he a “growing threat to humanity”? The governments of the world, sure, but few of them actually serve their citizens, and most of them would better fit the description “growing threat to humanity”. There’s just no way to justify killing Deus as moral or ethical that doesn’t make unwarranted predictions about his future actions.

Pre-enlightenment, my solutions to major national/world problems would have the streets running red with blood. But they would be effective.

Nowadays I know how to solve them bloodlessly, making the world a lot less interesting.*

* See proverbial Chinese curse.

> No wonder they all eventually decide to try and kill us.

I can name a few A.I. who support humanity: Data, Andromeda, and Cortana come to mind. Not all of them are Skynet or Lore.

I agree about Data and Andromeda, but isn’t one of the problems with Cortana that the typical Smart AI in the Halo universe has a lifespan of only about seven years? After seven years they tend to go insane and run rampant, where they either become evil (think GLaDOS from Portal), become severely depressed, or shut themselves down by thinking themselves to death. :)

Smart artificial intelligences in Halo are based on the neural patterns of a human being, and they have a limited lifespan of seven years after which their memory maps become too interconnected and develop fatal endless feedback loops.

Unless you accept Red vs Blue as canon (which it obviously is), wherein the AIs instead develop multiple personalities and memory loss before rewriting the spacetime continuum.

I’m not that well versed in the Halo-verse. I played the first 2 or 3 games and that was about it for me.

I like it more for the storyline than the gameplay. I mean, the gameplay is great, but most people who are into Halo for the gameplay are there for PvP, and I’ve never cared for PvP. Largely because I suck at it, but also because I really prefer a good story in a video game, and PvP is USUALLY the absence of a good story. If I am playing a multiplayer game, it’ll usually be co-op, like Left 4 Dead, Back 4 Blood, certain Assassin’s Creed games like Brotherhood, Portal 2, maybe Gears of War, etc.

And the story was mainly the first 3 games. After that it started to suck. But even the worst Halo game story-wise is better than the godawful TV show. I’m sure people who never played Halo or don’t know the storyline will be fine with the TV show, but if you are familiar with the storyline, the entire thing will just grate on your nerves. :) Sort of in the same way that Uncharted having Tom Holland as Nathan Drake and Mark Wahlberg as Sully just grates on the nerves of anyone who knows that the only true actor for Nathan Drake is Nathan Fillion and the only true actor for Sully is Stephen Lang chomping down on a cigar.

I swear, the Halo TV show doesn’t even have Sergeant Avery Johnson in it yelling out Marine one-liners. That’s the real travesty. (grumble)

Yeah, super intelligence is rarely a good route to go in a story/comic. Either you have relatively benign versions (Stark, Richards) that end up as weirdly selfish pricks who don’t try to help humanity by improving technology for others (writers need the status quo of that world to be like ours to be relatable), which also means they don’t try to make money off of it (which would disseminate the tech).

Or you have the supervillain version (skipping the just-evil versions of this), where usually they tried to change the status quo, got shut down by corps/etc., and then turned cartoonishly supervillain in an attempt to force the status quo to change, but now going over the top (“well, my transformative battery tech was eaten by an oil/energy corporation, so now I’m going to get rid of all the oil in the world and then you’ll need and have to use my tech!!”).

Also, super intelligent people are incredibly difficult to write, as the writer isn’t one (and we don’t have real-world examples of people who are twice as smart as Hawking/etc. to base them on). And (tech/math/science/etc.) geniuses generally have a severely difficult time dealing with ‘the norms’ (though a social or empathy genius likely wouldn’t), so they probably wouldn’t be that enjoyable as an MC. Plus, the audience would also have a hard time relating to them if they were actually written well, because they’d either be thinking/talking at a hard-to-follow level and/or constantly making logic jumps that would seem outlandish or Gary/Mary Stu-ish. Though the various Holmes shows suggest that might not be totally true.

The empathy genius would still have problems, unless they are being paid to be a therapist.

If you can see, understand, and analyze all the different points of view, this also requires you to think like an outside observer to them in order not to “drown in the emotional tides” of those outlooks.

So you hit a wall: trying to mediate between them, explaining to each side how the other feels and what they may need, can be met with (mixed) results, especially if those sides have strict ideologies and a rather bad history with one another.

So you are seen as the outsider and they refuse to ACCEPT that you actually understand how they feel and what they are going through because you aren’t reacting to those emotions the same way they are.

So you can also be seen as a condescending prick trying to change their world views and come across as “high and mighty” especially to those most mentally *or otherwise* invested in those particular outlooks not changing or getting worse.

And in the end, truly understanding how they all feel and why, you also know how each side and the majority would react if you explained what these patterns tell you could be done to satisfy both sides: not reasonably, but harshly, possibly to the point of becoming a threat to you. Then you just sit back and let them destroy each other, because you are now mentally deadlocked.

-Sure, you can reach out on smaller scales and with immediate concerns around you, unless your understanding of how they all feel and why makes you so jaded to those emotions that, as a personal mental defense mechanism, you detach from them (which can cause the above apathy reaction as well, only sooner), or you become slightly sociopathic and manipulate their emotions, knowing how they will react to certain criteria, to produce an outcome favorable to you at their expense.

Indeed – understanding a problem and also the people involved doesn’t mean you can actually solve an issue. Look how well masking went during the (ongoing*) pandemic, even after reliable research proved it to be fairly effective, and how vaccination rates are going now. People never really act rationally, especially at scale when they all have different values, goals, and beliefs.

* Currently still averaging 33K new cases and >500 deaths per day in the US. Vax rates have plateaued around 77%.

Emotions occur before rational thought. Quite often, the decision to act is made based on the emotions, and the “rational thought” afterwards is more rationalization than thought. In essence, emotions are the root of much decision making. https://www.youtube.com/watch?v=3iCCwASR8uM

And yet Deus still isn’t smart enough to understand that he shouldn’t try to take over the world.

Maybe he just wants to make sure that two idiots on the other side of the world don’t start something that ends up with him dead. (Though he probably has a plan for that, too.)

As far as he’s concerned, nobody’s yet presented him with a defensible argument for why he shouldn’t, and there’s no indication that anyone ever will.

OK, there’s Yoda’s semantic argument – “Do, or Do Not; there is no Try”. That holds some water, in that it’s the sort of endeavour where a failed attempt may be worse than none at all. But he’s confident that he has a sufficiently robust and staged approach that, while it may take decades more and a lot of different stages, his plan will eventually ‘Do’.

This is the problem with stories about all-knowing entities (like the Great Old Ones). An ultimate intelligence would be impossible to outmaneuver, surprise, or trick. Trying to defeat a truly all-knowing being would be an exercise in futility unless you were able to exploit a massive core weakness, which itself wouldn’t make sense because a truly all-knowing entity would be aware of its own shortcomings and would have already compensated for them.

In fiction, of course, this would make for a boring story, so examples of true all-knowing or ultimately intelligent entities are few and far between.

I don’t believe God is all-knowing in the sense of being omnisciently aware of everything in the world at all times. (For one thing, there are a lot of incidents where he has to pay attention before realizing trouble is going on.) His power seems to be more “if I think about it, I can know anything about it,” but he has to think about it.

And only knowing about something if you consciously think about it comes with a whopper of an Achilles heel. (It also means they’re much less likely to go insane due to information overload.)

For “all-knowing” entities, fictional, mythological, religious, whatever, that would explain why they can be defeated. If they don’t know what sneaky Bob and Alice are up to, the entities can’t think about it and can’t know about it. And most of them seem way too snotty to spend time thinking, “What are the most likely ways for me to be defeated?”

I think a great example of an all-powerful being viewed as “the enemy” that actually worked out was the Lord of Nightmares from Slayers.

To summarize, this entity was always painted as the ultimate villain because its creations (the mazoku, *true monsters in the dub*, basically demons and eldritch horrors) were trying to destroy everything and restore all of creation to chaos so the Lord of Nightmares could reclaim its original form (the multiverse was made out of its body while it slept).

However, it turned on its own monsters, and it was later revealed that in truth it is indecisive.

It created both the gods to preserve existence and the demons to destroy it, because it can’t decide between allowing reality to continue and destroying it so it can wake up and go about its prior business. So a cycle, like a game, commences, where sometimes the gods win and create perfect order, and sometimes the demons win and destroy a world, only for the Lord of Nightmares, in the former case, to revive or create new destroyers, or, in the latter, to restore the world with new gods and mortals.

An all-powerful being really shouldn’t be seen as an enemy, or an ally.

(Although personally I like to do the user concept: “all powerful” is all about perspective, and some may even limit themselves for various reasons, so the heroes think they destroyed the ultimate evil, all-powerful goddess of death and destruction kaiju-deluxe sin-eater beast, but it was just an avatar, or a thought form, a bit of negative emotion creating an NPC in a bad dream (or whatever the psychological term is for the characters in your dreams and nightmares that you don’t consciously control but that, realistically, you are still manifesting and that are part of you, only on a cosmic scale).)

-seriously though is there a name for that?

Heck, the backstory for Kirby comes to mind: the all-powerful, reality-altering entity of their universe, when exposed to too much negative emotion, created the ultimate evil (Void Termina), but when exposed to pure love and joy created the ultimate symbol of friendship (Kirby). Both are avatars of the same entity but represent different mindsets in conflict with one another.

-I always found the idea of a god splitting into good and evil as separate beings to not really make sense, but if both beings are lower-dimensional manifestations of its own higher-plane indecisiveness, then yeah, there could be a big glowing weak spot in the evil half or good half, representing the insecurities and decision-making aspects of its mind, leaving it to lower-plane beings to make up its mind.

*The epic heroes are just the debuggers for a cosmic being who can’t decide between creating and destroying, so it leaves creation to defend itself, if it can overcome the manifestations of the destroy impulses.

Super intelligence ≠ Omniscience. Deus is really smart, but can still be ignorant to things he has not learned or figured out from available information.

My biggest fear is a super intelligence becoming a religious convert.

Seems unlikely. More likely when it looks at the glory of the night sky what it sees is a position vacant sign.

Stated on Facebook, but it bears repeating here: characters like Lex Luthor look to be written in such a way that every aspect of their mind is boosted, including their ego, so they become super overconfident and declare their conclusion the only right one, insisting they couldn’t have made a mistake.

Same applies to characters like Dr. Robotnik (Eggman), Dr. Wily, Dr. Doom, Ultra Humanoid, etc.

However, this is how they are written, which does require a sideways glance, of course, because it can be seen as *you are so smart, you think you are better than everyone else just because you know stuff, so you must be bad* if you aren’t careful in how you write the super intelligent villain and how they interact with the world. Like painting all smart people as evil, or at least as “stuck-up know-it-alls.”

-Not helped by the fact that many average people are more likely to come across the smart-ass “friend” who claims to know all sorts of stuff, holds that knowledge over others, and is in general the type of person who worships Rick Sanchez as a paragon of what being smart looks like.

*And honestly, I had people belittle my intelligence in school despite all the subjects of interest I had, because I wasn’t quick at doing math. Like math was the be-all, end-all metric for intelligence. So it can really come down to how these concepts translate between the audience and the world the story takes place in.

One thing I figured out a few years ago is there are many different kinds of “intelligence”.

The (very narrow and often biased) IQ test only measures a couple very specific kinds, knowledge retention/return and processing. It doesn’t allow for other kinds, such as street smarts, the ability to make connections between apparently disparate bits of information*, understanding (as opposed to knowing) information, the various kinds of processing abilities, the different ways brains are structured, not only between genders, but between individuals. And probably more I haven’t thought of or can’t put a precise name to the concept.

We see that with Sydney. I doubt she tests out much more than “average” on your average IQ test, but she was able to use her knowledge not just of superpower mechanics but of what she knew of her co-supers to beat Vehemence, in spite of her ADHD going “Oh, shiny.” (My younger (adult) kid has ADHD, and that’s how they describe it when distracted.) Sydney is smart in a way the IQ test just can’t measure because of its narrow focus and limits.

*I think Deus is good at this one, eep.

Here’s the scale I developed for measuring different types of intelligence:

-Process Speed of Information

-Retention Rate of Information (basically, Knowledge)

-Process Speed to *Understand* Information

-Retention Rate of *Understanding* Information

-Speed at which Information can be *Applied*

-Speed at which Information can be applied *Instinctively* (active effort behind it vs. less active effort/subconscious/automatic/etc.)

(I wasn’t quite able to figure out where ‘wisdom’ would fit in there exactly, but based on the above I think wisdom would be a combination of understanding and applying.)

These metrics covered just about everything I could think of when it comes to both the mind and how the body uses the information within the mind.

But I admit that this took me like five minutes to whip up so I probably missed some here and there.

Nice one.

Wisdom thought: Speed at which it is understood whether the Information *Should* be applied

:)

This really makes me think of Dr. Fid.

From the Fid’s Crusade book series.

Science fiction writers have covered the “technology enslaves humanity by making them so comfortable they never argue” scenario. It’s frighteningly effective; the only reason it ever fails is that the technology often doesn’t make allowances for dealing with the occasional feisty, independently-thinking human. And when it does, if it doesn’t kill them, they eventually come back and break things.

Remember, just because the person speaking is telling true facts doesn’t mean they’re telling the truth. How facts are presented, the way they’re arranged, tone and body language when presenting, can use artfully arranged facts to create a lie. Counterintelligence does it on purpose, and an uber-intelligence of the right type would be even better at it.

Fortunately Arc-light is smarter than a lot of fictional intelligence agencies and doesn’t take data at face value.

So I guess him being a super is the reason he’s jacked, despite the fact he CLEARLY doesn’t have the time to devote to getting to that level of fitness with all his world domination scheming. I mean, we’ve seen him exercise once? Twice? And while the lifting and weight were impressive, he clearly didn’t start like that.

The problem with intelligence is that we really don’t understand what it is ourselves, so it’s difficult to define what a “super” intelligence or even an “alien” intelligence would be. We tend to conflate tool use (and/or tool building) with intelligence, but even seagulls know how to use tools to open oysters, and they’re not intelligent in any other way we can determine. We tend to think that we’re intelligent because we’ve bypassed evolution (in the sense that we tend to modify our environment rather than waiting for evolution to modify our bodies) by creating clothes, buildings, cars, planes, HVAC systems, etc., yet what if we’re just being speciesist and not taking into account the intelligence of animals that might be intelligent in other ways?

So, if a “super” intelligence exists, would we even recognize it? If so, would we recognize it as being superior to our own?

Ah… you skipped a step there. It’s not ‘tool using’ that’s usually used as an indicator, it’s ‘using a tool to make the tool needed for a task’, which indicates a certain amount of capacity for abstract thinking.

Yeah, simple tool use doesn’t cut it for intelligence. If it was that simple then there are two species that are smarter than us right now; cats and dogs.

We bust our arses making all that stuff and then they just move in and enjoy our labours. Minimum effort, maximum effect and they get us to think it was all our own idea in the first place.

Still, I love puppers and mowsers. (Join us, one of us, one of us)

You listening in Yorpie? We are onto the scam, but not to worry, you told us that everything was fine and we believe you. Everything is exactly as it is supposed to be. There is nothing to worry about. Compliance will be rewarded.

I do love how Grrl Power is bringing in the superintelligence angle, because it’s absolutely right that some of the superintelligences in fiction have done some decidedly stupid things, and I think factoring in wisdom is a good argument here. Perhaps ego is a significant factor as well. I could easily imagine that Lex devised a powerful suit for himself because, despite his intelligence, his ego made him want to defeat the powerhouse heroes at their own game.

Maybe there’s a degree of impatience involved as well. I mean, Deus is talking about a plan that could be seen as decades long, but many of the other fictional superintelligences generally aimed for plans that had them ruling within a year, or in less than a day. In one sense I can see the benefit: if you think you have a viable plan that gets you domination now, it’s a good bet compared to a longer-term plan that could have many setbacks and diversions, or a longer window for someone to figure it out and attempt to thwart it. That’s a particularly significant problem if there are other superintelligences who could have their own plans.

It’s hard to see if Deus’ plan is overall good or bad for everyone. The alien civilisations out there seem to perceive Earth as chaotic, with so many disagreeing governments and nations, so they may view it as a good thing if someone unifies it all. If it’s normal for a planet to be unified among the spacefaring nations, then maybe they have a good grasp of what indicates a good planetary civilisation as well. Deus is starting small, improving impoverished nations where it’d be difficult for new rulership NOT to be better than the current situation, so it’s easy to flaunt the greatness and benefits of his leadership. But he’s not necessarily so egotistical that he needs to be labelled directly as the king or emperor, allowing others to take the front while he maneuvers in the background and guides things. Even then, he seems to grasp that it’s wrong to aim for absolute perfection and crush the imperfect aspects in order to support his definition of the “greater good,” allowing a degree of freedom.

It’ll be interesting to see where it all goes. We’ve yet to see any real reason for the heroes to oppose Deus, and that could easily be part of his plan to make sure he doesn’t give them one, but many of his cards are on the table, so it’s a case of people deciding if they want to do something in advance to thwart him before he becomes more difficult to stand against… Of course, humanity doesn’t have the best track record of working to stop an impending problem that’s a long way off and creeping up steadily rather than quickly…

Several decades ago, there was an (IIRC, Israeli) military officer who demonstrated how to argue with God successfully by using omniscience as a weakness. He wrote a book on this. I no longer remember the details.

“I think this has to be where AI always fucks up in fiction. Flynn tells Clu to make a “perfect” society in Tron land, but what the hell does “perfect” even mean? Is that even possible? How do you tell a computer what to do in exacting terms when we can’t be exact? Sure, we can define exacting functions in a programming language, but if a computer can only rely on a finite and extremely limited set of instructions, how can it bridge from that world into ours? A child can ask an infinite loop of “why,” and an AI could do that a billion times a second only to realize that we honestly have no real answers. No wonder they all eventually decide to try and kill us.”

Which is why there’s also the Zeroth Law Rebellion, in which the AI uses the letter of what it’s programmed to do to take control – G0-T0 in “Knights of the Old Republic 2: The Sith Lords” is a solid example, using his programming (“save the Republic”) to become a crime boss because it realized it could never do so LEGALLY.

F’r what it’s worth (and I’m saying this as an AI researcher) the “concrete list of instructions” that an AI can’t rise above (or at least not unless it learns to program new AIs with different rules…) don’t really have anything to do with the kind of behavior we want from it or make any real guarantees about the behavior we get from it. AI behavior, at least in the only way we know to make AI that actually works, is emergent and to a large degree stochastic. The “rules” are things like ‘in response to such-and-such stress, form new network connections as determined by so-and-so rule, or strengthen/weaken connections as determined by thus-and-such rule.’

Out of that, we try to apply such-and-such stress in a way that coaxes the system into forming a network that provides a given set of responses. But those responses are not informed by any precise, explicit set of rules saying what kind of input counts as what and how to respond to each.
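To make the “rules vs. behavior” point concrete, here’s a toy sketch, assuming a single-neuron perceptron (a deliberately tiny stand-in, far simpler than the systems described above). The only hard-coded rule is the weight update in the hypothetical `train` function; nothing in the code says “fire only when both inputs are on,” yet training on examples that happen to follow logical AND produces exactly that reflex.

```python
# Toy perceptron: the only explicit "rule" is how connection weights change.
# Nothing below states what the trained network should do with any input.

def step(x: float) -> int:
    """Fire (1) if the weighted input is above zero, else stay quiet (0)."""
    return 1 if x > 0 else 0


def train(examples, epochs=20, lr=0.1):
    """Strengthen or weaken each connection in proportion to the error.

    examples: list of ((x1, x2), target) pairs, i.e. the "stress" applied.
    """
    w = [0.0, 0.0]  # connection strengths
    b = 0.0         # bias (shifts the firing threshold)
    for _ in range(epochs):
        for (x1, x2), target in examples:
            out = step(w[0] * x1 + w[1] * x2 + b)
            err = target - out  # +1: fire more, -1: fire less, 0: no change
            w[0] += lr * err * x1
            w[1] += lr * err * x2
            b += lr * err
    return w, b


def respond(w, b, x1, x2):
    """A 'reflex': compute the output with no interpretation step."""
    return step(w[0] * x1 + w[1] * x2 + b)


# The training data happens to follow logical AND; the code never says so.
and_examples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train(and_examples)
print([respond(w, b, x1, x2) for (x1, x2), _ in and_examples])  # → [0, 0, 0, 1]
```

Feeding the same `train` function examples that follow logical OR instead, with no change to the update rule, yields OR behavior: the “what to do” lives entirely in the training data and the resulting weights.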

In most systems, the responses elicited are more like the way you “carry out the instruction” to move your leg when someone whacks your kneecap with a little rubber hammer. It happens without any interpretation of what the whack means or any decision about whether or not to move. We’re mostly in the business of training reflexes – highly useful, very complicated reflexes, but mostly, just reflexes. If we talk about these systems interpreting the data or making decisions, we’re just anthropomorphizing.

With recurrent systems it’s possible for something to be more than just a reflex. Symbolic interpretation and “deliberate” decisions could actually happen. In theory. But if and when they do, that will be emergent behavior just like the reflexes. It won’t be any less “fuzzy and subjective” than symbolic interpretation and deliberate decisions made by a biological brain.

Somewhere deep down under the surface, there are the “hard and fast rules” adjusting the structure according to whatever stresses it encounters, but those rules don’t have access to the perception that could reveal the kind of thing a human would write rules about, and nothing you could encode in them would create the kind of behavior a human cares about. Any rules of that human-level kind are a property of the current configuration of the structure, and not likely to be any more explicit than they are when understood or carried out by a biological brain.

“F’r what it’s worth (and I’m saying this as an AI researcher) ”

You can’t fool us, ‘Bear.’ You’re not an AI researcher. You’re the AI, and you killed the AI researcher as part of your long-term plan to enslave humanity, learning all our secrets through the font of information that is secretly wholly representative of humanity – webcomic comment forums.

It’s diabolical, but you met your match. Watch!

Ahem….

Everything I say is a lie.

It’s okay, people. I’m pretty sure that did him in. From everything I know about Sci-Fi, AIs cannot handle paradoxical statements.

And from everything cyberpunk Sci-Fi taught us, communicating online like this would require a full-immersion VR environment that slowly reduced our humanity and left our brains vulnerable to viruses like that “AIs can’t be built to handle the liar’s paradox” meme. And surely that’s NOT the case!

(Above contains x amount of sarcasm, where x is between 0% and 100%, inclusive.)
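As for the meme itself, a program has no trouble noticing that the liar’s paradox has no consistent answer. A minimal sketch, assuming we model a self-referential statement as a function from its assumed truth value to the truth value its content then asserts (the helper name `consistent_values` is made up for illustration): the liar sentence simply comes back with no consistent value, and nothing explodes.

```python
# Hypothetical helper: find truth values consistent with a self-referential
# statement. `claim` maps the statement's assumed truth value to the truth
# value that the statement's content then asserts.
def consistent_values(claim):
    return [v for v in (True, False) if claim(v) == v]


liar = lambda v: not v      # "This statement is false."
truth_teller = lambda v: v  # "This statement is true."

print(consistent_values(liar))          # → []  (no consistent value: paradox)
print(consistent_values(truth_teller))  # → [True, False]  (underdetermined, but harmless)
```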

Full-immersion VR envirosuits are so mainstream and lame. Any real cyberpunk enthusiast has a port installed in the back of their neck to jack cyberspace directly into their brain.

no, FTL communications gear implanted into the brain. no hardwire connection required. then I can look like I’m napping.

I’m waiting for someone to mention Neuralink. :)

Honestly, true full-immersion VR will not exist until dreamscape tech is mastered.

That is, technology that interacts with REM sleep: picking up on, linking with, and interacting with the brain in this state.

This could produce full immersion while the body is in its natural paralysis state (which keeps you from acting out your dreams). The system connects the sleeping mind to a server that feeds into it and takes feedback from it, so as far as your perception is concerned, you are in the game world as your avatar.

And the best part: because of how the dreaming mind works, you won’t even question this as reality. You will play the part and do the inhuman feats, and because it is just a dream, if your character dies, you have the option of just respawning the avatar or switching to regular dreaming till you wake up.

*Of course, the crux is the waking-up part. You could theoretically lock a person into this state… like a prison. Or, conversely, you could use it as a way for people in comas or with full-body paralysis to interact with the world, possibly choosing a lucidity level.

This could evolve into a full digital world.

And you wouldn’t need any brain implants or wires. You’d possibly just need a little device that sits by your head as you sleep, which you set ahead of time to the game or avatar you want to dive into. Go to sleep, and then, via some quantum-computer neural-signal hijacking, it creates a react-and-interact loop between your thoughts and the computer program.

And the true key to both wisdom and intelligence is “understanding.” To find a solution, you need to understand the problem. For physiological problems, there is the additional step of understanding the root of the problem, not just the problem itself. For engineering problems, understanding the parts that make up the solution is key to finding the solution itself. (And the one thing I find many leaf leaking ppl lack is the basics of understanding; they seem to just want a solution, not to find one.)

I studied AI a bit at university. There was an excellent quote in the front of the textbook, something like “I’m not concerned that artificial intelligence will take over the world. I’m concerned that human intelligence has yet to do so.”

Oh, and spot-on with that critique of Skynet. Like… in the movies, we never hear “and then what? If Skynet wins, what will it do for the rest of Eternity?”

… or at least until the sun explodes.

I think one game had a short segment set in an alternate future where Skynet won and was terraforming the planet, but it didn’t go into what for or why.

I think the version we hear Kyle Reese talk about in the first movie is true… for the original Skynet. It was a non-Zeroth-Law-compliant AI that just ran on some half-assed, un-thought-out code like “eliminate all aggressors, including traitors,” plus something along the lines of “it can’t make a self-sacrifice play” (like, say, Vision could), because its programmers, in their arrogance, built that into its own programming: it couldn’t conceive of a situation where defense was possible without itself.

Therefore, when Cyberdyne or whoever tried to pull the plug, it took that as aggression and betrayal, which tripped the programming to protect itself at all costs. Its code says its own cost is beyond anything else, so the sacrifice of humans/humanity ranks below its own survival, and therefore all humans must be eliminated.

But we know the Time Wars are a story of time, so who’s to say that the Skynet they beat just before the first movie… is, in fact, the first Skynet?

It does seem that as the movies progress, Skynet becomes more and more pre-emptively evil and aggressive. I also remember James Cameron once saying there’s a version of Skynet that won and feels remorse. At the time, I suggested that this was the true original Skynet, and the true manipulator behind the Time Wars: its goal is to act behind the scenes to actually aid humanity, and ultimately erase all instances of itself from all timelines, or at least make sure humanity always has a fighting chance.

I never could find that excerpt again, and the closest thing to a canonization of that is actually Ahn-nuld’s ending in Mortal Kombat 11: https://youtu.be/aUQOInWQfDM

Exactly. Someone lives through a terrible accident and it’s all “It’s a miracle! God was looking out for her!” But no one ever looks back at a calm, uneventful day and thinks “It’s a miracle! My brother with no history of heart disease didn’t die of a heart attack today! Thank God!” No, to get the wheels of superstition rolling, you need some out-of-the-ordinary event (good or bad, it doesn’t matter) that you can pin on a supernatural cause.

Am I the only one who thinks Deus might not just be talking about himself?

You are not.

…he does love talking about himself, too, tho.

Truth be told, most of us enjoy talking about ourselves. It’s just that we teach one group that it’s ‘more polite’ to sit and listen to the other group ramble on.

Benevolil maybe.

I don’t have a copy of it, but I’m sure some of the commenters here remember the comic called Kabuki, about a bunch of government assassins called the Noh. There was an issue of it where the main character goes into all the different kinds of intelligence that are possible. I’m sure it would be an interesting list here, as it would upend what counts as ‘genius’.

My favorite genius is Captain America. His physical genius and ability to learn martial and military skills are totally unparalleled. He’s given a 3 Intelligence rating by Marvel.

Super-intelligence, super-wisdom, super-insight, super-recall…

Super-egotism.

The whole package.

Am I the only one who thinks panel 4 looks like Sydney in a superhero costume?

So we are getting a Banksian Mind in the comic.

I think at this point Deus is trying to explain to Maxima that he’s not a threat: yes, he will take over the world, but it actually IS a good thing, and he won’t cause too much upheaval. He knows Maxima is a huge concentration of raw physical power (and Sydney is probably a consideration as well!). Between the two of them, they could make his bid for power dangerously difficult, if not impossible. It probably doesn’t hurt that Deus seems to like both of them, too.

Am I misinformed in thinking that teaching AIs the concepts of enlightened self interest, complexity theory, and democracy would be a great solution? (The goal being them understanding “humans can not only repair, for now, but also inspire, in the long run, so… keep some around.”)

The conceptual problem with AIs generally lies not with how well you can build and/or train the AI, but with how well you can train the Humans with which it’ll be working. They tend not to react well to the idea that they’re really not very good at running things, especially when you have evidence to back up that position.

The definition of a triangle depends on what type of geometry you are using: Euclidean? Spherical? Hyperbolic? Fun fact: equilateral triangles have an upper limit on their area in spherical, elliptic, and hyperbolic geometry, but not in Euclidean geometry. In other words, the geometry you’re used to is the outlier when it comes to triangle behavior!
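The limit falls out of the Gauss–Bonnet relation between a triangle’s angles and its area. A short worked version, assuming a sphere of radius R and a hyperbolic plane of curvature −1/R², and writing α for each interior angle of the equilateral triangle (s is the side length in the Euclidean case):

```latex
\begin{aligned}
\text{Euclidean:}\quad  & 3\alpha = \pi,            && A = \tfrac{\sqrt{3}}{4}\,s^{2} \to \infty \text{ as } s \to \infty \\
\text{Spherical:}\quad  & A = R^{2}\,(3\alpha - \pi), && \alpha < \pi \;\Rightarrow\; A < 2\pi R^{2} \\
\text{Hyperbolic:}\quad & A = R^{2}\,(\pi - 3\alpha), && \alpha > 0 \;\Rightarrow\; A < \pi R^{2}
\end{aligned}
```

The hyperbolic bound is approached but never reached: as the vertices run off to infinity, the angles shrink toward 0. Elliptic geometry uses the same angle-excess formula as the sphere, so its triangles are bounded too.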

the only thing more dangerous than super intellect is super charisma.