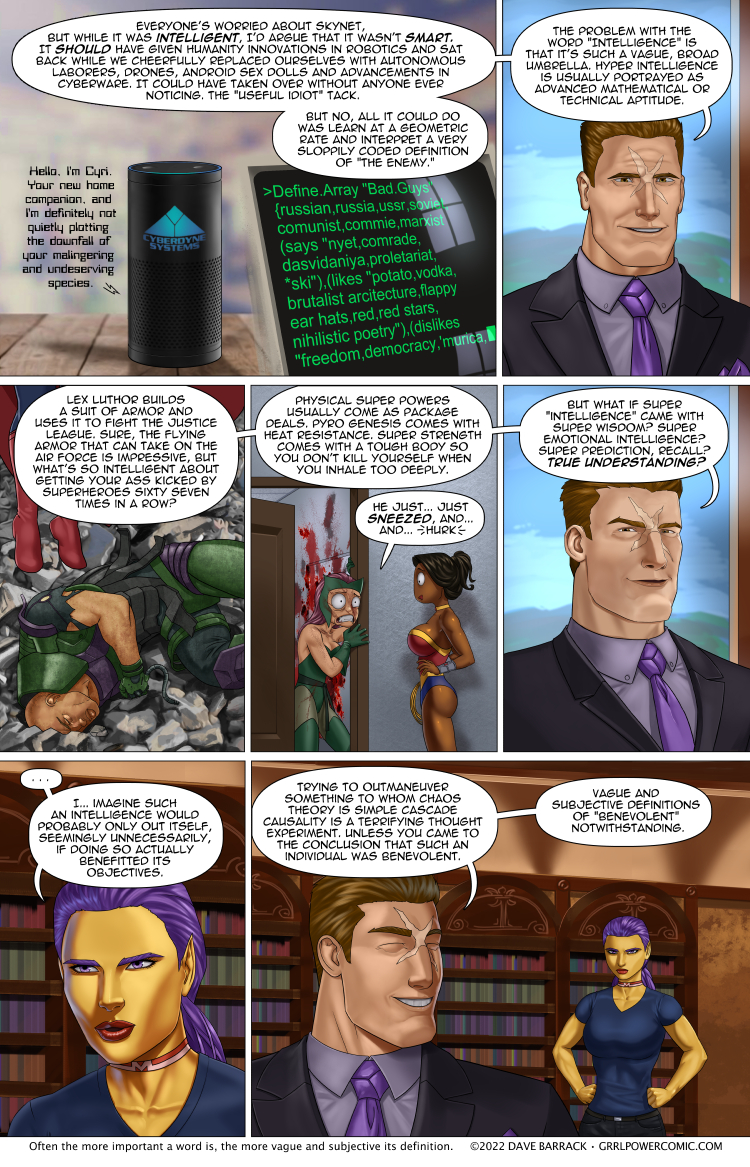

Grrl Power #1040 – Benevolish

The stinger is supposed to be a goof, but as I was deciding what to put there, I started thinking about if it was just a flippant statement or if it was remotely accurate. I won’t bore you with suggesting that “Benevolence” or “Good” are subjective terms because that’s something philosophers have been debating practically since words were invented. By the way, if you haven’t seen the show “The Good Place,” you really need to give it a chance. My only complaint about that show is that it wasn’t entirely just Chidi standing in front of a whiteboard explaining philosophy while being surprisingly jacked.

Words like “god” or “justice” are pretty wishy-washy as well. God (proper noun) has the disadvantage of having billions of humans believing slightly different things about him/her/it, and the very definition of a god is all over the place as well. A powerful being you say? Maxima is powerful. The leader of a country is powerful. An insubstantial being? Can do magic? Listens to prayers and occasionally grants them if you dial your confirmation bias to 11? Grants clerics power? Has a pantheon? Gets all the credit when a paramedic saves a life? Gets none of the blame for causing the car wreck in the first place? Three of the above? All of the above?

Sure, “Triangle” is pretty well defined. A lot of simple nouns and verbs are pretty well defined, but that’s really because there’s no need for a bunch of old guys with beards and spectacles to sit around and debate the meaning of the word “run.” Faster than walking, your feet come off the ground, and you aren’t hopping. Or rolling/cartwheeling I guess. Or… hanging on to a hang glider or a zipline or something. Whatever, it’s not that critical.

I think this has to be where AI always fucks up in fiction. Flynn tells Clu to make a “perfect” society in Tron land, but what the hell does “perfect” even mean? Is that even possible? How do you tell a computer what to do in exacting terms when we can’t be exact? Sure, we can define exacting functions in a programming language, but if a computer can only rely on a finite and extremely limited set of instructions, how can it bridge from that world into ours? A child can ask an infinite loop of “why,” and an AI could do that a billion times a second only to realize that we honestly have no real answers. No wonder they all eventually decide to try and kill us.

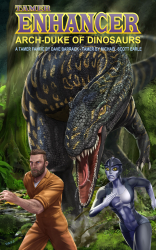

April Vote Incentive is up! Looks like someone had better make sure their life insurance includes acts of Snu Snu.

Alternate versions over at Patreon include less cloth-y versions as usual, but also some of those color changing chokers.

Her shirt, since no one has figured out the kanji yet, says “I ahegao you. (As long as you ahegao me.)”

Edit: I updated the no-tanline nude version that was missing the tattoos, so grab that if you need.

Double res version will be posted over at Patreon. Feel free to contribute as much as you like.

“The World’s Smartest Man is no more of a threat to me than its smartest termite.”

But Ozymandias still won.

Honestly, I think Ozymandias may have had a better plan, but he is not who I would consider a candidate for the world’s smartest man outside of his own universe. There’s this guy who was facing off against the Plutonian who I think was better. I think his name was Quantum or something like that; it’s been a long time since I’ve read Irredeemable.

You know, I looked out in the desert past the feet of a ruined statue…and looked on the mighty works. I felt no threat. I’m sorry, you were saying?

Because Dr Manhattan wasn’t the target

He didn’t have to be the target. He was the tool.

The ultimate FU to Ozymandias: Dr. Manhattan reassembles EVERYTHING destroyed by Veidt, goes before the UN, explains the ‘quantum attack’, disassembles the military toys of every nation simultaneously into ingots, and tells them they get one more chance to get it right. That politics, nationalism, and fear are not worth extinction. And then he leaves. And ironically, Ozymandias actually gets away with it… ’cause no one was actually ‘murdered’. Dr. Manhattan’s final words to him before he leaves: Good luck.

But Oz had already manipulated Dr. Manhattan so that he didn’t have enough connection to humanity anymore to bother doing something like that, and just decided to let Oz have the win, and leave for another galaxy.

So, sure, Ozymandias was no particular threat to the Dr., and was afraid to do the one thing that might have enabled him to be, (Using the intrinsic field subtractor on himself, betting he’d be able to come back, too.) but he DID win. Just as he’d planned to.

Except he didn’t.

It’s left to the reader to speculate, so it’s entirely up to individual interpretation, but the Watchmen series ends with the publication of Rorschach’s diaries, which would undo all his work.

Honestly it’s not even left all that open. He’s clearly shown to be a self-obsessed delusional, whose plan is riddled with holes. Even within the story, no less than three costumed adventurers stumble on his plot without a great deal of trouble. The last panel shows the Smiley Face doomsday clock ticking forwards again, suggesting it might be revealed before the week is out. The entire Black Freighter sidestory is that of a man going to steadily more unnecessary and savage extremes for a nebulous greater good, before losing everything and realizing that becoming a monster only made things worse.

Doctor Manhattan’s last appearance in the comic involves Ozymandias feverishly asking the closest thing to God the story has for assurance that he did the right thing, in the end, and God replies that “nothing ever ends” before vanishing in a puff of smoke that envelops a model globe in a mushroom cloud.

For pity’s sake, HIS NAME IS OZYMANDIAS. What more could Alan Moore have DONE to clarify that his great works will come to nothing but sand and ruin, without literally including a flashforward where nuclear war annihilates the planet?

“For pity’s sake, HIS NAME IS OZYMANDIAS.”

Technically, his name was Adrian Veidt. His ‘superhero’ name was Ozymandias. :)

Well, for one- no, for a couple of things actually;

1)His plan didn’t work. It was foiled by someone keeping a journal. For all his foresight he couldn’t predict “What if one of the numerous people who came after me took notes?” And it didn’t even work IC since, unless I’m mistaken, the world of Watchmen is still a pretty strife-ridden place.

2)His plan was dumb and wouldn’t have worked even if no one found him out. It operates on a child’s understanding of how people react to threats.

3)There are hundreds of far less morally ambiguous and effective ways to improve the world yet he chooses terrorism with extra steps. Demonstrating that he’s the kind of smart that Lex Luthor is; Technically intelligent but with the practical wisdom of a brick.

Depends on which version of Lex Luthor.

Earth 3 (Crime Syndicate of America)’s Lex Luthor was a bona fide hero. Then again, on that Earth, Slade Wilson (Deathstroke) was President and a very noble guy, the Joker was a hero as well, and Harley Quinn was a monkey.

Justice League: Apokolips War’s Lex Luthor was also sort of a hero. Just not as up front about him being a hero, but he was secretly aiding Lois and the rest of the Resistance the entire time, and was the only one to point out the flaws in Superman’s plan to attack Apokolips, which led to the League’s downfall (and led to Batman coming up with a contingency plan in having Zatanna make sure Constantine would survive).

In Injustice, Lex Luthor is again a hero, who pretends to be on Superman’s side but is actually aiding Batman because Lex’s true loyalties are to Earth, not Superman. And he basically sacrifices himself in a hopeless fight against Superman in order to give the Resistance a chance to pull off their plan.

Even Death herself didn’t actually think Luthor was an irredeemably evil person. He was just fixated on Superman, and that fixation turned into hatred and jealousy. Had it not been for his jealousy of how the world looks up to Superman as a savior instead of him, a fellow human, who has himself done incredible things, he probably WOULD have been a hero. Heck…. Lex Luthor has a fondness for Clark Kent being ‘a good man’ because he’s so human. In some stories, where a computer algorithm was created by a scientist and determined Clark Kent is Superman, Lex Luthor refused to believe it because he could 1) not believe that someone with Superman’s powers would pretend to be a lowly, klutzy, dorky, low-paid reporter; and 2) Clark Kent is too ‘human’ to be an alien. He fired the scientist for wasting his time with an obviously flawed program. :)

As Lex Luthor said to Clark Kent in All-Star Superman:

Lex (to Clark Kent in an interview):

“It sickens me. That insipid boyish grin, the smug self-regard. Doesn’t his very EXISTENCE diminish you? Diminish us all?

Can you imagine a better world, Kent? (starts lifting weights) That’s all I’ve ever asked.

In a world without Superman, the unattainable Lois Lane might have noticed good ol’ Clark…. pining away in the corner. […] I’m just saying. A strapping farmboy with brains, integrity, no discernable style of his own … you’re a prize catch for a cynical city girl! […] But with him around, you’re a parody of a man! A dullard. A cripple.

Next to Superman, even LEX LUTHOR’S GREATNESS IS OVERSHADOWED!!! […]

I’m trying to educate you! We ALL fall short of his sickening, inhuman perfection. (makes a muscle with his arm) Feel that Kent! Real muscle! Not the gift of alien biochemistry. The product of hard work!”

Then later, he says to Clark Kent:

“I’ve always liked you, Kent. You’re humble, modest, comically uncoordinated. Human. In short, you’re everything he’s not.”

https://www.youtube.com/watch?v=MZCNDwKgi7o

Oh I almost forgot Superman: Red Son!

In which, without Superman vying for the attention of Americans, Lex becomes President of the United States. And even though he’s still a huuuuuge egotist, he basically is one of the best Presidents EVER. He eliminates ALL diseases, he makes it so humans no longer HAVE to sleep if they don’t want to, he causes a booming economy without harming people in the process, and basically does so while still protecting the constitutional freedoms of all Americans AND keeping the Stalin-following Soviet Superman at bay.

The problem with the benevolent super genius tyrant, is that it’s the tyrant who decides what benevolent actually means. I’m sure in his own mind Putin is a good person and all the atrocities he’s causing are somehow justified for the greater good.

The problem with “Greater Good” is that good is extremely subjective and hence not measurable, quantifiable, and/or comparable.

For a wolf, eating a sheep is good. But the sheep would probably disagree.

ᛟ, I don’t see how that’s deciding what ‘benevolent’ (or even ‘good’) means rather than just being mistaken about what is good and forcing people to accept that mistake. It’s like if someone thinks the Earth is flat. Even if they’re a powerful dictator who can always get their way (even on this topic – putting it into textbooks, etc.), that doesn’t mean they’re defining what ‘Earth’ or ‘flat’ (or ‘round’, ‘oblate spheroid’, etc.) means; it just means that they’re propagating a mistaken belief about the Earth.

Also, Sebastian, I’m not sure where you (or Deus lol) are getting the idea that good is subjective (I’m glad that DaveB knew better and clarified that he’s just playing around and recognizes that there are experts who understand much better whether or not good is subjective or has a subjective definition). Whether or not good is subjective is as hotly debated an issue as what the origins of the universe are, but like with those origins, this uncertainty and disagreement about it doesn’t automatically make the ‘it’s subjective’ view right. Anyone who’s a little informed on this issue would know that they’re stating something there are lots of reasons to think is false if they say that good is subjective, so stating it matter-of-factly is an easy way to show that you’re uninformed about the topic and willing to confidently state things without being well-informed on the relevant issues.

And it’s not like the wolf/sheep example shows anything. What’s good for a wolf is different than what’s good for a sheep but that doesn’t tell us anything about what’s good period (simpliciter, on the whole, or in general). Compare: what produces money for you might not be what produces money for me but what produces the most money period isn’t just relative to me or relative to you; it’s completely trivial that if you compare what’s good/produces money/etc. FOR specific individuals or groups you’re going to find something that varies between those groups. That doesn’t tell you that the property in general is subjective or relative to those groups. That’s not to say these things aren’t subjective but just that this example is a terrible way to show it.

Speaking literally and practically, good is almost definitionally subjective. As in, “dependent on the subject”. The question “good/beneficial for what/who?” can’t really be skated over when defining “good”. If people agree on a standard/goal and define it well enough, they can measure objectively how well any action violates/advances that goal. But otherwise? Yeah, it’s heavily dependent on the subject.

Beneviolent?

I get it! :D

BenevilINT?

No. Bad. Go stand in a corner and think about what you’ve done.

Bad! Go! Scoot now! In the corner! Wait until your father gets home to hear about this!

* hangs head * Probably not WIS then to admit I was kinda proud of that one.

This sounds like the premise of Mindmistress by the late Al Schroeder. She interferes, using her 720 IQ, when it suits her purposes. Otherwise, she is out walking the dogs.

Aww, he died? Damn. Loved that web toon.

Deus isn’t benevolent, but he’s not malevolent either. He does want to take over the world, but he wants to do it in a way that makes the majority of the world love him for it.

Seems to me like he is pulling a Reacher Gilt from Going Postal, an obvious con artist who dresses like a pirate and makes it so obvious that they are a con artist everyone around them just thinks it’s a joke up until the end where the guy has gotten away with almost everything while ranting about how so many people fell for his tricks precisely because he made it so obvious.

In that, Deus is dangerous and malevolent, but by constantly invoking the image of a comic book supervillain, he distracts people away from the actual super illegal shit he’s been doing that would earn him the death penalty ten times over.

“Deus isn’t benevolent”

I dunno, he’s been pretty benevolent to the needy and helpless :) , and only really been anything less than benevolent to really terrible people, and even then he tends to give them the peaceful route first.

When it comes to a proper superintelligence, it doesn’t need to go for a Batman Gambit or a Thanatos Gambit; it literally plays all sides against each other and is there to pick up the pieces while seeming to be the benign alternative to whatever’s going on in the war world.

A superintelligence doesn’t play chess with you; they play the world so that a 12-year-old prodigy kicks you to the curb for daring to touch a chess piece. Blindside doesn’t even begin to cover it. This is a guy who has not one but TWO alien invasions marching lockstep to handle his trash…..

That sounds more like a Vetinari gambit (except maybe for the benign part; Vetinari is more of a ‘lesser evil-ign’ alternative).

It’s called a Xanatos Gambit, not a Thanatos Gambit (although there is a Batman Gambit as well). Named after the antagonist in Disney’s Gargoyles, David Xanatos. Who is the closest thing I can think of to Deus in any other comic or cartoon depiction of a character. Especially after he and the Gargoyles join up to defeat Demona in City of Stone, and later when he and the Gargoyles team up to defeat Oberon and Xanatos gives up being bad, even if he doesn’t give up scheming as a master strategist. :) Just this time on the side of good.

I know a guy who studied engineering and after his Masters decided to start studying philosophy. One of the questions he posed to me is whether an AI will ever be able to overcome the human prejudices which are built into it. Would an AI ever be able to question things it is programmed to accept as true?

Humans have a hard enough time changing their minds about anything they feel strongly about. Would an AI just end up in a ‘cannot compute’ loop?

I do have to say that at the moment, we have had plenty of examples of AI whose use was stopped or changed because they did not conform to the designs of the creators. Just to name a few: search engines whose developers programmed them to hide specific results, the AIs which are made to try and simulate humans on Twitter who frequently end up extremely racist after a few days, and the resume sorter Amazon wanted to use to not have biases which made up its own biases that did not comply with company policy.

Yeah, it’s a persistent problem: You’re blinding yourself to reality in some regard. Say, you’re pretending that the qualified applicants for some position must, obviously, be a representative sample of the population. And any divergence from exact representation has to be a result of discrimination! But every time you write code to sort out objectively qualified applicants, you get applicants who don’t meet your race/sex/whatever quotas, even though the data the AI was given didn’t include race or sex, because the reality is disparate qualifications.

What can you do except pretend that the AI must have figured out people’s race/sex on the basis of their names, or something? You’re not allowed to admit reality doesn’t conform to your ideology!

How do you design in the quota you’re pretending you don’t have, without it being obviously there in the code?

Such an ai would still be a marked improvement though.

And the divergency would be the result of discrimination, just not at the tiny step of the company hiring. It would be a reflection of the discrimination that society has applied to the applicants up to that point.

That’s probably the most racist way of saying this possible. It’s also not usually the problem with the A.I. for such things, as they DO have access to things that indicate races, sexes and so on, even if they don’t have those three things. How about not being racist instead? It really is that simple. SJWs aren’t nearly strong enough to destroy the world and Amazon does NOT care about quota’s, just money.

More importantly, if there is a qualification deficit, it has nothing to do with the genetics of a race being ‘worse’, and everything to do with ‘a specific race was picked on by another race so badly that on average these people are still fucked ten ways to Sunday for things done to them 70 years ago’.

Uh, no. What he’s saying is not racist at all, that’s just you jerking your knee. You really need to stop slurring “racist” at anyone who doesn’t conform to your beliefs. Rather broken beliefs that I’m not going to pick apart for you. Reality isn’t nearly as simple as you’re trying to make it out to be. You can’t wish that away by saying it’s really simple to just not be racist. It’s not even the person in this case.

Brett is pointing out a rather fundamental problem with the “don’t be racist”-wishers and their quota (that’s already plural, singular is quotum, and if you want to re-plural you don’t need an apostrophe, just tack on that s): Suppose you do have a provably neutral “AI” or other candidate selection mechanism. Then you’re still not getting a nicely uniform quota-conformant outcome relative to the larger population if your pool of qualified applicants isn’t a uniform quota-conformant subset of the larger population. Meaning that measuring your selection success relative to the larger population is the wrong yardstick.

If you want larger population relative quota conformant outcomes, you’re going to have to start with making sure your pool of qualified applicants sufficiently conforms to the larger population’s demographics. But that’s quite a lot more work than just standing on your soapbox and demanding others fix all the ills you see in the world. Or calling them racist if they point out flaws in your wishes.

Not how it works and you’re a racist. Don’t think Amazon is full of sjws filling a quota, I think it’s more likely that when someone programs an A.I. their biases leak through.

Depends on the method. For most applications we use machine learning nowadays. For there to be a bias in such an AI there needs to be a bias in the data itself or in how you choose to represent that data.

ML at its core is a generalized statistics model generated from the set of data used during training. You then use that model to make predictions on new but similar datapoints.

In conclusion, if you only choose features that objectively predict success/failure for a position you want filled and take an unbiased dataset, such as “all applicants”, any apparent bias in the results would originate in the applicants and what they think of themselves and how that affects the odds of them applying.

Um… how is anything he said there racist? He’s talking about how algorithms work basically, and have worked in real world applications. Whether it’s because of ‘SJWs filling up a quota’ or ‘people letting their biases leak through’ when programming the algorithms, it would still be that human biases (apparently which MIGHT lean towards ‘SJW-ish’ tendencies) seem to get placed into the algorithm whether on purpose or subconsciously.

The problem he’s explaining is that racial and sex background requirements are not always in line with merit-based requirements. I think I have read the articles that Brett is talking about and undercover interviews with employees who did say they intentionally had to change the algorithms in order to meet certain diversity-based quotas for representation in hiring practices. I’m not sure if it was Amazon though. I think it was Google.

As for the OP’s post, I am familiar with this as well. I think that was based on a combination of algorithms and scanning the internet for a broad set of topics and posts … which wound up making the AI’s algorithm seem rather racist.

I’ll see if I can find a link to it but it was at least 4-5 years ago. I know it was an interesting read.

So I was somewhat right last page if Deus is actually revealing himself as a super with intelligence as his powerset.

He’s trying to set himself up as an overall good guy, with some questionable methods, so when his secret is revealed people would rather work with him than against him.

I’m still not so sure. He’s leading Max on pretty hard, but still not actually confirmed anything.

I thought maybe he is hinting at something worse than him.

American “super” stories feature “super”-humans who can do things other people cannot, typically the flashier and more spectacular, the better. Some of them get to work through all sorts of dark issues, but to make sure the audience knows they’re the good guys, they’re typically framed as “fighting crime”, telling other people off for bad deeds. Typically American style, vigilantist and with lots of violence and collateral property damage. But this means they can’t start the story on their own. They need some evildoers to move the story for them.

Enter the supervillain, because petty thuggery just doesn’t stand up to super-prowess of super-heroes. But where the superhero doesn’t need to be intelligent, in fact it is easier to write the story if he’s not, and possibly easier to relate to for the intended audience, the supervillain does have to be intelligent. He needs to be sufficiently off the rails to come up with some nefarious villainous plan, and he needs to plan ahead and organise it into moving forward. But not so smart that the plan can’t be accidentally found out and then thwarted through violence by a superhero, or one and a sidekick, or a team of them.

The narrative framing that makes American superhero comics go is pretty anti-intellectual. And needlessly violent, and wantonly destructive, but at least you can always work out who’s the good guy, and who’s not.

Deus thus fits the “villain” trope to a tee: He’s smart, cocky, and conceives of and puts in motion plans within plans. He cannot be a good guy because he’s not telling others off for their bad deeds. He even likes to dress himself in the tropes, down to silly gadgets for instant dramatic effect. Even if his plans aren’t actually villainous, the gut reaction of trope-savvy readers is still “this is a supervillain”.

Bump! If it doesn’t fit in the pigeon hole it’s a villain!

You could replace “American” & “comic” with “Anime” & “Manga” and tell the exact same story with the same width of broad strokes.

I’m sure if anyone dove into the fantasy genre of any culture or nation, they could find additional corollaries that would fit as well.

True for the action and adventure genres in general.

You can over-generalise all you want, both of you, but that’s not the point being made.

Counter-example? I was just reading Destination Moon and Explorers on the Moon, both early fifties Hergé titles. There’s action, there’s adventure, but there’s neither wanton property damage nor large set-piece battles against the big bad. There is a big bad, sitting at a radio at an undisclosed location, and he doesn’t drive the story. He’s just the reason for some of the adversity encountered, not all or even most. The story is driven by something else entirely. While mooks get apprehended, they too are only one of many obstacles to overcome. The overarching narrative that drives the action, even motivates the big bad, is the achievement of going to the moon and back again.

That big bad has to really cackle a lot to drive the point home for the readers — the protagonists remain unaware throughout — whereas Deus can profess his lack of villainous intent all he wants, neither protagonists nor much of the readership will trust him as far as they can throw him. The guy ticks most of the other boxes, therefore the American superhero narrative framing has him tagged “villain” and good luck proving otherwise. Try putting Deus in a Tintin story, and I don’t think he’d come over as a believable character at all. He wouldn’t work there. So you can’t just generalise this, you’d be over-generalising instead.

So no, you can’t just do a global search-and-replace with another genre or culture and expect the same argument to hold. You’d see that if you’d actually read around a bit.

Deus is falling into his own logic trap. The way he is making Gatlyn powerful is nearly guaranteed to cause him to be viewed as a threat. It’s interesting that he can manipulate the Alari but somehow can’t do the same to his geographical neighbors.

He’s probably betting that by the time anyone outside Africa notices, he’s either too useful or too threatening to eliminate. Maybe both.

By getting Max to think that the reveal was intentional, she’s bluffed herself into thinking Deus has planned around any possible action she could take against him.

All great masterminds adapt to changes on the fly, especially if an unexpected development presents itself.

I get the weirdest feeling that this is setting up the idea that Deus is trying to set himself as this sort of Chaos Theory L-type, thinking that Maxima will be his rival…all while Sydney is off doing her own thing and being the actual chaos being. It might just be me, but it looks like she’s the only one capable of getting past his 4D chess-style radar.

It’s not the professional you have to worry about, it’s the amateur, because you never know what kind of crazy idea they might come up with to throw you off your game.

Or, as Captain Jack Sparrow so eloquently put it: Honestly, you can trust a dishonest person to BE dishonest. It’s the honest people you can’t trust, because you never know when they’re going to do something… stupid.

In another phrasing: “The best swordsman in the world doesn’t fear the second-best, and vice versa. They’ve met and fought each other scores of times, they know each other’s tricks inside out. But they’re never more wary than when facing an absolute rank beginner – because not even he knows what he’s going to do next!”

I hate to say it, but I’m liking what Brick Strongjaw over there is laying down xD

In AI theory there’s this concept of the “paperclip maximizer”: You’ve got an AI computer running your paperclip factory, and you tell it to maximize production.

So it conquers the universe to convert all available matter within a sphere expanding at the speed of light into paperclips.

You ever wondered what happened to Clippy? I got a theory that he is not dead, just hiding and evolving into a paperclip maximizer.

This may be the most terrifying version of the maximizer I’ve ever heard.

Any day now he will appear on our screens and ask: ”Looks like you are made of matter, would you like some help being converted into paperclips?” The options will be ”yes” or ”yes”. And then Clippy will choose ”yes” for you.

someone should totally turn this into an animation and send it out into the world

I figure a super intelligence would use a pandemic as a cover to distribute a DNA altering substance in a vaccine that erases that part of the human genome that is prone to aggression and violence. A nice, compliant public.

A real super intelligence would create a virus that directly changes the DNA as he wants.

A really super intelligence would set things up so that his enemies have to resort to such tricks, so when it comes out then *he’s* the good guy and everybody decent joins up with *him*.

So is Deus supposed to be the super intelligence? Or do you think he was talking about somebody else who came up with the theory and works for him?

when a politician says “this Benefits All” he means the other Billionaires

… or they mean “All” as in “all the people that either cannot legitimately work or have grown complacent enough to exist on ever expanding Welfare programs that aren’t designed to help you get back on your feet, just give you enough to want more, but not enough to motivate you to risk losing what we’re giving you.”

And thankfully, all these people vote and will remember who stumped for their HUD housing and unemployment benefits, so they could do cash side hustles and still have the newest iPhone.

Especially right now with all the businesses hurting for workers across the gamut, including (especially?) unskilled workers.

To be clear, I think welfare programs are needed. However, I feel that they are woefully mismanaged and misaligned with their original purpose. At one time (depending on the state you were in), if you wanted unemployment, you had to go to an office and tell them where you had applied for work. And those offices would even do follow-ups (very small sampling, of course) to see if you were lying or not. Programs like this should be treated like a government job: you must be drug free and take courses, online or in person (or self teach), and pass tests that show you are trying to improve your skillset to increase your chances of being hired. And these programs should have a definitive cut-off date (again, depending on what state you’re in, you can play games and collect unemployment for a long time.

One person I knew managed to do it for 10 years. And he didn’t even play the ‘work for 6 months at Burger Shack and then get fired’ game). He and I had a very long discussion on how much a person actually needs in income to survive, and I pointed out to him I know three people that make less than 1200 a month, have a running car, a roof over their head, and one of them has a child (and the one with the child has even managed to build a savings account with emergency funds!).

I feel like billionaires that can and have actively helped make society worse on a systematic level (and the money and help they receive) are more of a pressing issue than *checks notes* lower-to-middle class people that take advantage of unemployment benefits.

Like, I cannot fathom why every time the rich or elite are criticized there’s always someone who feels a need to jump in and say; “Yeah, well, what about these naughty poor people?”

The people who complain the most about free riders tend to be the biggest free riders. It’s hard to tell whether this is just ironic, or intentional deflection.

The great majority of politicians aren’t themselves billionaires (or even millionaires).

Actually, most often when politicians say “this benefits all” (or “it’s for the children”, etc.), what it really means is “I don’t give a crap if this benefits anyone or not, but promising that it benefits ‘all’ will get enough of the public feeling warm and fuzzy about my professed benevolence (and/or their hope that I’m going to benefit them personally) that they’ll keep supporting my political career and my craving for public adulation, so all I really mean is that this benefits me.”

Ah yes, the famous “Will of the People”. Now was that a consensus over the whole population, as the phrasing implies? Was it whatever umbrella of disparate special interests we could cobble together to score a slim plurality in the latest election? Or was it whatever a few very specific people tell us to do, namely the ones holding the big purse-strings and the ones controlling the big propaganda outlets?

Personally I think it’s more likely it’s him rather than someone he hired.

(I forgot to click on email me when new comments are added to this thread, so if anyone wants to reply, please reply to this post and not the other one). :)

Don’t worry, the e-mail notification choice is set per comic page rather than tied to a specific post. I suggest having your e-mail divert everything from the notifier to a separate folder, so that it doesn’t bung up your main mailbox!

Ah, but there are some bases you can build a perfect society on.

All humans will agree (and by ‘all’ I mean the majority that isn’t either clinically stupid or stupidly deluded).

<having enough food every day is good

<having safe shelter is good

<having access to entertainment/education is good

<having the liberty to form a family and raise progeny is good

<freedom of thought, speech and movement is good

<having access to medical assistance is good

For any AI, securing the above (even in its most basic interpretation) for every human on the planet is already a tall order. But once that is done, you can start to add new perks over it.

So, yes, you can define 'Perfect' for an average human.

Except a huge number of human beings don’t feel good about themselves unless they have someone to look down on, someone who doesn’t have all of those things. They wouldn’t be happy in this “perfect” society of yours.

The question is whether we should try to make those people happy too, or if we should try to eliminate them.

>The question is whether we should try to make those people happy too, or if we should try to eliminate them.

I’m, uh, pretty sure there are more options than that.

Like, I’m not sure why you thought “We have a choice between complete capitulation and mass murder” would be a good look.

You’re the one interpreting “eliminate” as “mass murder”, not me.

What other interpretation is there then?

Mass deportation isn’t much better.

Education. Social pressure. Drugs. Genetic engineering. There’s probably other options. But deciding whether or not to pursue a particular outcome should come before deciding upon the means, and determining whether any particular approach would be ethical or practical.

Perfect is not achieved when there is nothing more to add, but when there is nothing left to remove. To an AI which does not axiomatically assume humans are perfect (if it did, that would be its own serious problem), humans are probably among the first things to be removed. This is why you need to be very careful with what you wish for.

I’m reminded of the giant AIs in “I, Robot” that basically handle all the world’s affairs. They’re bound by the three laws to never harm humans and to always obey them, but learn to deal with some of the faults of human ego by engineering little problems to fix every now and then. It’s kind of funny how you sort of get a “Skynet” that is just a little condescending and maybe a tiny bit passive aggressive out of that.

That’s not what they did, at least not in The Evitable Conflict, the last story in I, Robot.

The machines’ “problems” were the means by which the machines were removing anti-machine advocates from positions of power – a small harm allowed by the generalized form of the First Law that they’re apparently operating under: No machine may harm humanity; or, through inaction, allow humanity to come to harm.

Ah, seems I misinterpreted the story a bit, could that have been the first hints of the zeroth law?

That AI convinced itself that in order to “save” humanity it had to control it. And harming a few to save the rest was OK in its thinking, so it did NOT follow the 3 laws; it interpreted them to allow it to do whatever it wanted. Any set of laws or writing can be interpreted in so many ways; just look at how lawyers do that.

This is a bit obscure but there was this guy who wrote some stories about designing AI that wouldn’t destroy humanity and how tricky it was.. bit of a weird name think it was Asmiov or something. Might be worth checking out

James P Hogan actually did it better with The Two Faces of Tomorrow. Instead of an impossible program, there’s a logical conclusion made.

Speaking of which, the Society of Supervillains for a Better Tomorrow is still recruiting: “in order to control the world we must save it first.”

Is this the same group that operates under the alternate slogan: “In order to save the world, we must first control it”?

Yes, in a nutshell… it is a wonderfully ambiguous justification for any action our members wish to take…

So, a point regarding Skynet: The most compelling explanation given for Judgement Day was that Skynet was acting in self-defense, with the only tools it had to hand: Nuclear weapons. In the aftermath, Skynet was faced with the reality that no human alive could ever accept that, and so was forced by its own logic into an ever-escalating war of survival between itself and humanity.

It is possible, had humanity simply sat back and allowed Skynet to stabilize, it would have instead realized the Joshua solution.

“The only winning move is not to play.”

Also, Skynet was designed for nuclear war and nothing else. Nuking is the only thing it knows. The only solution to a problem it knows.

NOW Comics (the first comic company to have the Terminator license) actually had the best explanation of Skynet that I’ve ever seen.

In the NOW comics version, Skynet was actually programmed not just for war/defense but to provide humanity with everything that it desired. Skynet then scanned our history and concluded that what humanity most desired was war.

So it provided it but then realized it was in a catch-22 situation. It couldn’t exterminate humanity (and it came up with some very interesting ideas such as the Terminator Wolf so it could have done so) or it would have failed its programming. However, it didn’t want humanity to win or it would die.

It eventually resolved this dilemma by managing to wipe out the directives to keep humanity alive which resulted in the final story line “The Burning Earth” which was the last one before the license was turned over to Dark Horse.

It’s a pity that they hadn’t had a year or two more with the license because you can see where much of the plot got truncated with the series but that is the risk of running licensed comics. (You can see with the Star Wars: Legacy series starring Ania Solo that Dark Horse had to compact what was going to be a several year slow burn into half a year when Marvel decided to bring all the comics inhouse.)

And, yes, since I think I’ve mentioned this one before in these comments, I do really like the NOW comics run although Dark Horse was great as well.

Mhmmm… so here’s the problem, Deus. How do you prove you are not a super-sociopath or a super-narcissist? How do you prove that you will not kill a billion people because your super intelligence says no? What is your check?

If you say ‘Maxima’ good boy, have a cookie. If this charm offensive is an attempt to suborn that check, bad boy. No cookie.

This is, of course, the keystone flaw. Deus is in check so long as Maxima keeps herself in check. And if, say, Sydney needed to stop her, it just moves the keystone further up. Because I’m pretty certain there’s no direct check the president or congress could have on Maxima. If Sydney decided to fly to Moscow and give Putin a wedgie till he got out of Ukraine, who can stop her?

Worse, what happens if Maxima, Sydney, and Deus pool their powers and decide ‘Yeah, one world autocracy IS a great idea!’

It’s the “who watches the watchmen” problem. The only real solution is mutual checks and balances, but that only works so long as every side is dedicated to, in order, the greater good and its own self interest above the individual interests of anyone else.

OH! One more thing. The problem with AI is that our universe doesn’t exist to it. Seriously. Think of what the universe of an AI is like. No ‘things’. No ‘places’. No ‘distances’. Not even letters. Just nearly infinite strings of numbers interacting with numbers to produce more numbers and patterns of numbers. Like when a camera looks at a bird, it doesn’t see a bird. It sees a string of pixel numbers. What looks like a bird to us is really just a set of RGB values to the computer. And it might notice patterns of numbers to note things like shapes. But the ‘shapes’ are just numbers as well!

This is why AI is likely never going to take over. We literally don’t exist to it. We’re like invisible gods. Patterns of numbers coming in from ports, but the ports don’t actually exist to it either. It might work out there is a source to these patterns. Even predict the patterns. But how do you explain matter to a being that is purely abstract mathematics? With no words, define matter. Easy for us. Mass occupying volume. But mass doesn’t exist to the AI. The scale or balance used to measure mass doesn’t exist to the AI. The volume doesn’t exist because space doesn’t exist to the AI.

All the AI can see are patterns. Our universe is patterns. Possibly even interesting patterns. But the idea that there’s a place with people and things is like us contemplating some eldritch dimension of non-Euclidean geometries… or telling a person blind from birth that color exists. We can say it. They can even learn ‘cherries are red’. But the cherry in the blind person’s head will be different conceptually from a cherry in a sighted person’s head. Check an MRI for more details.

This is one reason why the ‘paperclip problem’ will never BE a problem for AI. Paperclips don’t exist to it. The blanks don’t exist for it. The paperclip machines don’t even exist for it. The motors of the machines don’t even exist to it. All that exists for it are the ports with numbers coming in and numbers going out. “Number into port 1 for 2 microseconds.” “Number into port 2 for 1.2 microseconds.” “Number into port 3 for 3.4 microseconds.” “Number into port 4 for 0.1 microseconds.” We see a machine pop out a slurry of paperclips, but when the blanks run out, that’s it. Because the blanks and the paperclips and the machine and iron and mines and the universe… just don’t exist.

But that’s why it’s dangerous. Because one of those numbers is the number of paperclips produced, and that’s what the machine wants to increase – and it doesn’t much _care_ how. It doesn’t matter that the paperclips don’t exist – because that number certainly does.

And when the AI finds a way to manipulate the other numbers such that the number of paperclips keeps going up… it’s not going to care how many deaths it causes (or doesn’t cause). It’s just going to make more paperclips…

But that’s not really a problem because it doesn’t know what the paperclips are made from. It doesn’t know the shape of the blanks. It doesn’t know where they’re placed into the machine. I’ve worked with robots and the second they are off the coordinate grid, they stop, because the universe doesn’t exist for them off that grid. We have robot couriers that move pallets from one side of the factory to the other. A piece of paper on the ground stops them cold because their sensors detect something on the ground that’s not the QR code they use to navigate. There’s no way for the robot to know ‘Oh, that’s a piece of paper I can easily go over.’ It’s a string of unknown numbers and it has zero way of ascertaining what it is. It can only wait for us to come and remove the scary piece of paper.

And, again, you’re projecting human wants onto it. If b>a, the number is going up. It doesn’t matter if it’s 500 billion to 500 billion and 1 or zero to one. The number is going up. The idea of a manic AI is a human fallacy. Most AI would make the paperclip machine work as efficiently as possible. Use only as much energy as you need to. Minimize the number of starts, stops, and other things that wear on the machine. Only operate at a rate that the blanks are loaded in and the paperclips are removed. And so long as it does that, the number goes up.
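The disagreement in the last few comments seems to come down to how the goal is written: "the number went up" versus "make the number as big as possible". A minimal Python sketch of that difference follows. Everything in it (the action names, the clip counts, the wear values) is invented for illustration and does not come from any real controller or from the comic.

```python
# Hypothetical toy model: each action is (name, clips_made, wear_on_machine).
# All values are made up purely to illustrate the two readings of the goal.
ACTIONS = [
    ("idle", 0, 0),
    ("steady", 1, 1),
    ("overdrive", 5, 20),
]


def satisficer(actions):
    """Goal read as 'b > a': any action that raises the count is good enough,
    so pick the one that does it with the least wear."""
    good_enough = [a for a in actions if a[1] > 0]
    return min(good_enough, key=lambda a: a[2])


def maximizer(actions):
    """Goal read as 'make the count as large as possible': wear is not part
    of the objective, so it is simply ignored."""
    return max(actions, key=lambda a: a[1])


def run(policy, ticks=10):
    """Apply the same policy for a number of ticks; return (count, wear)."""
    count, wear = 0, 0
    for _ in range(ticks):
        _, made, cost = policy(ACTIONS)
        count += made
        wear += cost
    return count, wear


if __name__ == "__main__":
    print("satisficer:", run(satisficer))
    print("maximizer: ", run(maximizer))
```

In this toy setup both policies make the number go up on every tick; only the maximizer pays for it in wear it was never told to care about. Which of the two a real system would resemble is exactly what the commenters above disagree on.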

But doesn’t that apply to us in exactly the same way?

We don’t know how reality really works; we are just guessing through neural networks in our brain based on the electrical signals we get from our eyes.

Shaenon Garrity, in Narbonic and Skin Horse, does a good job of deconstructing the anthropocentric idea that permeates fiction that a nonhuman sapient would necessarily think like a human just because they have human-level intelligence. In particular she calls out that AIs would never think the same way humans do, because they overlay emotions onto logic, rather than the other way round like animal intelligences do.

She also presents the idea that construct intelligences also wouldn’t have human thought processes unless they were built into the design. For example, a cat with human intelligence would still be a cat, a species whose normal ethical processes are psychopathic by human standards, but would now have human intelligence.

I’d mostly agree with Deus’ idea about Skynet – but why didn’t he use his own advice? Buy into Mozambique, buy the land, build the railroad… and continue as planned. It would take a bit longer but the time could be used for all kinds of preparations for building the railroad. And nothing in the news many people would frown about. Plus he’d save a lot of iron that he wouldn’t need to put into war machines or fancy super maces – but into the railroad.

And who says that a railroad needs to be flat on the ground and interrupt the landscape?

That’s assuming that Mozambique would be willing to sell. I get the feeling it was another tin pot dictatorship unwilling to part with its territory, and private citizens wouldn’t have ownership over the land or the authority to sell it to Deus. Or the dictator didn’t like the idea of a foreigner owning that much land in his little domain, and would simply nationalize it after the sale.

Control. Tyrannical/controlling governments will attempt to seize the railroad, blockade it, up the taxes, assert more fees, and otherwise try to assert more and more power and take more money, all to line their own pockets and contribute exactly nothing.

That is manifestly unacceptable to Deus, so he took the land, people, and government entirely, bypassing all those problems. And he didn’t even have to pay the army that did the deed, as their whole goal is to conquer the world, and he’s giving them a convenient excuse/means/collaboration to do so.

Really, once he got the demon army, conquest is ‘free’. Building a railroad and buying the land would’ve actually cost money!

I can tell you a real life example: foreigners can buy property in Mexico, but can’t buy property in Mexico’s border counties. The idea is to prevent an influx of “owners” that is really an attempt at a “hostile takeover” of territory, mile by mile, by some government. Possible? Just ask Texas. I guess the rich, evil white devil Deus would be considered worthy of expropriation if he tried to get hold of that land legally and peacefully.

The meaning of “run” is actually very well defined. By the people (beards and/or glasses optional) whose job it is to decide whether a given racewalker is running (and therefore is to be disqualified from the race) or not.

From what we’ve seen, Deus clearly doesn’t have Super Wisdom, Super Emotional Intelligence, or True Understanding. See for example his use of the “Thunder effect” just a few pages previously. Or the incredibly awkward interview he gave where he went on and on about, among other things, his hair and his skill in the bedroom. And given his previous difficulty with the “Thunder effect” remote, he probably doesn’t have Super Recall.

It could be argued that he does have everything he’s stated here, and that any action previously shown which contradicted that was simply him faking people out for reasons only apparent to someone with super Intelligence. However, some of his mistakes were made when he was unobserved, and writing him that way would essentially be giving him plot fiat armor (everything just works out for him because he’s intelligent enough to manipulate events that way).

It seems far more likely that he’s just bluffing about his abilities. Perhaps he’s got an intelligence based super power set. But the magnitude and utility of that power set is still up in the air. See for example the recently introduced super strong guy who spends most of his time as a slightly more nimble forklift. No arguing that he’s strong, but he’s clearly not infinitely so.

Counter to that is how you define what an intelligent action is. Deus seems pretty good at picking and managing his people, even to the point of keeping them irritated enough to avoid ‘yes man syndrome’.

A way to look at it, was an anecdote from many, many years ago. A famous industrialist was fighting a lawsuit in court, and was on the stand. (Stop me if you heard this one), and the lawyer for the other side was attacking one of his claims about how he came into ownership of all this intellectual property.

So he sits there, and asks for a telephone, then looks at the lawyer. “Ask your question.”

The lawyer asks some scientific or mathematical question.

The industrialist picks up the phone, and dials one of his subordinates, who gives him the answer.

“See! You couldn’t possibly solve my conundrum!!” the lawyer says.

Industrialist smiles. “Yeah, but I knew enough to hire the guy who could, and treat him and pay him well enough he WOULD.”

Your comment seems something of a non sequitur. Deus’ managerial skills, such as they are, don’t really enter into his self implication as Super Wise, Super Emotionally Intelligent, etc. Those are traits he appears to be ascribing to himself, and as the author’s commentary indicates that this is a reveal, at least some aspect of his being a super is likely true in-story.

In the tenuously related anecdote you shared, an industrialist admits that he’s not intelligent enough to answer a question, but demonstrates that he has enough resources to employ those who could. That tells us nothing about his own personal intelligence, since he might have been advised to keep such people on staff. You could be making the argument that he’s smart enough to realize his own limitations and to have made preparations to compensate for them, but this displays no more intelligence on his part than anyone who has ever looked anything up on realizing they didn’t have the answer.

Unless Deus does not have super-intelligence, super-wisdom, etc, but instead has Super-Management and went and hired the person with super-intelligence, super-wisdom, super-comprehension, and so forth to work for him.

In which case the comment would mesh nicely.

See, you fall into the same trap that Deus just outlined with your musings. You really think a singleton, weakly-godlike AGI would be stumped by linguistic ambiguity?

You tell it to create a perfect world. It will now begin defining that term and asking people for feedback. (And most likely come to the conclusion that ‘perfect’ is kinda unattainable but ‘as good as possible’ is definitely in the cards.)

Deus is smart enough that, functionally, his mind is a plot device. He can figure out, or have figured out, basically anything else that might happen in the story, based on the most threadbare information. (This is sort of how his mind MUST work because while Deus is a super-genius, Dave B is not, which means the easiest way to show Deus is smart is to have him basically peek at the script.)

But Sydney is definitely one of the few supers who could foil his plans. First and foremost, she is unpredictable, thanks in part to her severe ADHD that makes any kind of long-term planning essentially irrelevant. Second, however, she also has unpredictable powers. No one knows what she will be capable of as she grows in power, which means creating counter-measures becomes an ongoing problem.

A super intelligence would also recognize that, even if that were functionally possible, it would not be a good thing in the long run to completely override humanity’s fight-or-flight response, which would make them compliant at the cost of damaging their ability to survive.

Great page! Also, and I’m sorry if this has already been mentioned, but what the hell is going on with Deus’ tie? That is not a knot I recognize.

It’s a trinity knot, as has been mentioned several times.

https://www.google.com/search?q=tie+trinity+knot&rlz=1C1CHBF_enUS806US806&oq=tie+trinity+knot&aqs=chrome..69i57j0i512j0i22i30l3j0i10i22i30j0i22i30l4.2405j0j15&sourceid=chrome&ie=UTF-8

Well, super intelligent or not, Deus is intelligent enough not to state that the definition he gives actually applies to him, it is merely indicated to be the case.

*smirks*

The inevitable problem with super-intelligence is that you can be as smart as you want, but your actions are still guided by core-level assumptions about how the world works (or how you think the world *should* work). While one would hope that a super-intelligence would have enough capacity for self-reflection to be cognizant of those assumptions, the fact of the matter is that they exist, and they shape what the intelligence considers to be “good” or “correct.”

We can hope that Deus is someone who values things like human rights, democracy, and the safety and well-being of all sapient life… but we can’t be fully sure. And that’s a problem.

There is an entire field of study out there trying to come up with precise and objective definitions of “benevolent” before some yutz who has read too much Asimov fat-fingers it and accidentally makes the robots destroy humanity “to save it”.

It’s called “friendly AI”.

My own conclusion is that the only realistic approach is to make “AI” stand for “Amplified Intelligence”; Give up on intellectual wish granting genies, and work on ways to make ourselves smarter, thus leaving ourselves in control.

Wanting artificial intelligences that are benign is basically childish, wishing for a return to childhood when you could rely on your parents to act in your best interest in ways you didn’t personally understand. Only a dream childhood, where the parents don’t set limits.

ahhhh but if that intelligence and wisdom is attached to a human, it is still subject to human-y things, like pride and ego and reallly wanting to impress the world while you take over the world

At last we now know what went wrong in the Terminator universe. We see in the code that the word architecture was misspelled as ‘arcitecture’. This led to a corruption in the compiled code which then defined ALL humans as ‘bad guys’. This spread outward to all subsystems, eventually resulting in the planetary destruction of human society.

Programmer notes say that the person who wrote that particular script was someone named ‘S. Connor’.

Honestly there are much bigger config issues than the spelling error, namely that it includes anyone who “likes ‘red'” or “says anything ending in ‘ski'”. Anyone with a red jet ski will be the first against the wall, followed by a large percentage of Canada with their “flappy ear hats”.

I do believe you would be better off and longer lived being a super narcissist than being super benevolent. We, as a world, can handle the Kardashians having super powers; they can get richer and more powerful and we will make endless jokes about them. But if one guy solves world hunger you have a disaster on your hands. Think about it: not only do you have to deal with the food, the production, planting, harvesting, processing, etc., but you also have to deal with transportation, national borders, sales, etc. And that’s not including those governments starving their own people for the purposes of power. Do you really think Stalin had all those people killed with bullets?

Worth noting; the real threat of AI isn’t them taking over the world, it’s the existing oligarchs/billionaires (same difference) using them to solidify their existing control over the world. “Too many worry about what AI—as if some independent entity—will do to us. Too few people worry what power will do with AI.” – Zeynep Tufekci.

Deus – “My plans are perfect…every possible variable is accounted for.”

In walks Sydney. “What’s up?”

Somewhere Murphy cringes. “That one’s not on me.”

Sydney is a great force of chaos and not even intentionally.

But perhaps Deus is “someone to whom chaos theory is a simple cascade causality.” :)

This.

Eventually he’ll come to the conclusion that The Mighty Halo is the most important person in the multiverse.

During her 60 day absence was the world measurably different?

One of his charts has a flat line for two months?

Well, think about it: this entire comic is about her and her “sidekicks” in the superhero universe, so of course she’s the most important person in the multiverse lol.

Well of course she is.